The Right Number of Partitions for a Kafka Topic

Ricardo Ferreira, Senior Developer Advocate, Amazon Web Services

© 2022, Amazon Web Services, Inc. or its affiliates. @riferrei

Slide 1

Slide 2

The impact of partitions

“My stream processor code in Flink worked well with 4 partitions, but with higher partitions the result is wrong.”

“My K8S cluster is acting crazy since I increased the partitions of a Kafka topic. Consumer pods are dying.”

“I tried to enable tiered-storage in my cluster, but it didn’t work with topics that are compacted.”

Slide 3

“Partitions are only relevant for high-load scenarios where parallel processing is necessary.” - Most developers out there

Slide 4

Slide 5

In reality, partitions in Kafka are:
1. Unit of Parallelism
2. Unit of Storage
3. Unit of Durability

Slide 6

👋 Hi, I’m Ricardo Ferreira
• Developer Advocate at AWS.
• Fanatic Marvel fan. My favorite characters are Daredevil, Venom, Deadpool, and the Punisher.
• Yes, they are all anti-heroes.

Slide 7

How many partitions to create?

Slide 8

Slide 9

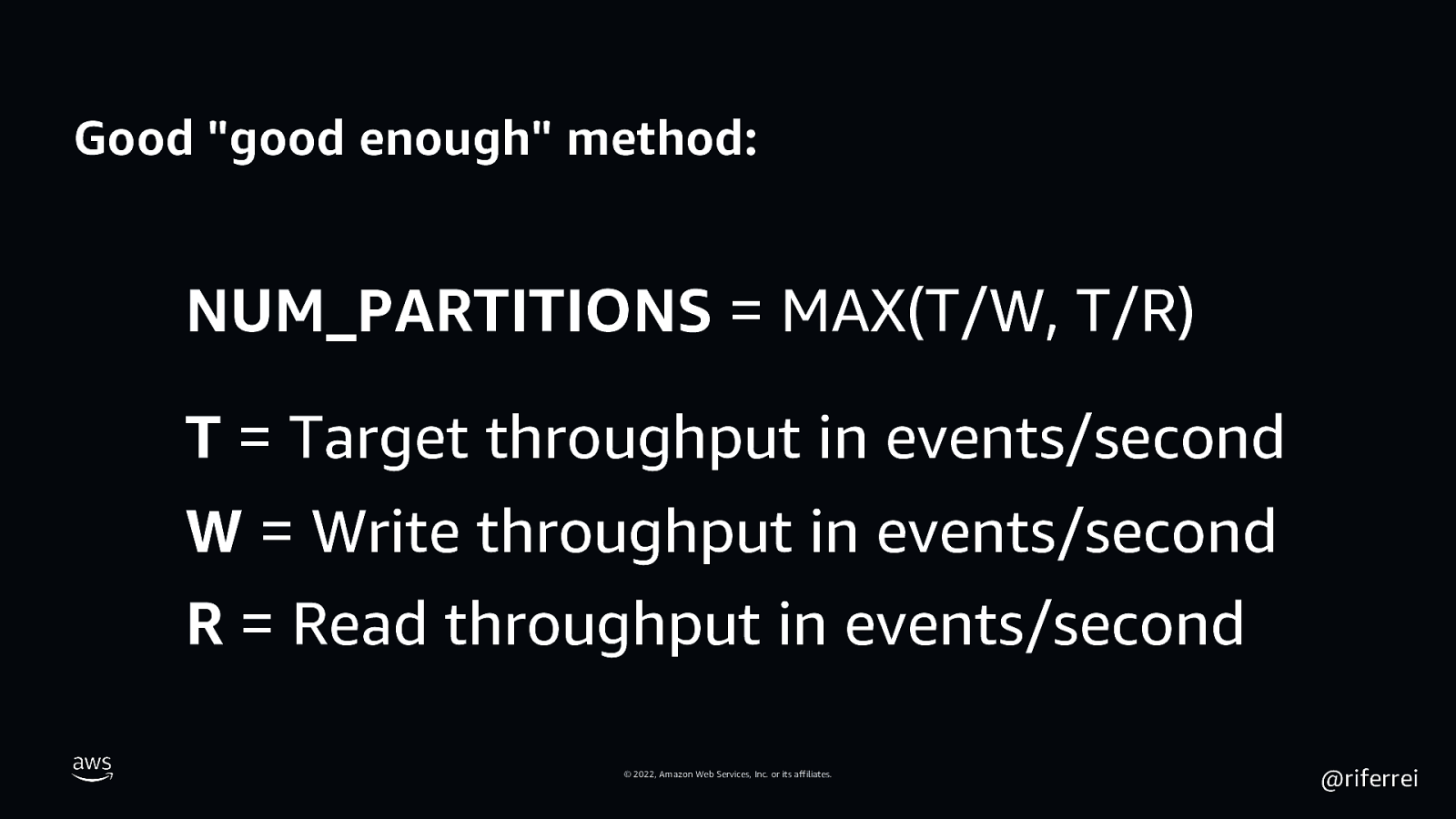

A “good enough” method:

NUM_PARTITIONS = MAX(T/W, T/R)

T = Target throughput in events/second
W = Write throughput in events/second
R = Read throughput in events/second
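The formula above can be sketched in a few lines of Java. This is only an illustration: the class and method names are hypothetical, W and R are assumed to be measured against a single partition, and rounding up to a whole partition is an assumption the slide does not state.

```java
/** Hypothetical helper illustrating NUM_PARTITIONS = MAX(T/W, T/R). */
final class PartitionSizing {

    /**
     * @param target target throughput T, in events/second
     * @param write  write throughput W, in events/second (assumed: per partition)
     * @param read   read throughput R, in events/second (assumed: per partition)
     * @return MAX(T/W, T/R), rounded up to a whole number of partitions (assumption)
     */
    static int numPartitions(double target, double write, double read) {
        if (target <= 0 || write <= 0 || read <= 0) {
            throw new IllegalArgumentException("throughputs must be positive");
        }
        double neededForWrites = target / write;
        double neededForReads = target / read;
        // The slower side (writes or reads) dictates the partition count.
        return (int) Math.ceil(Math.max(neededForWrites, neededForReads));
    }
}
```

For instance, with T = 100,000, W = 20,000 and R = 12,500 events/second, the write side needs 5 partitions and the read side needs 8, so `numPartitions(100_000, 20_000, 12_500)` returns 8.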

Slide 10

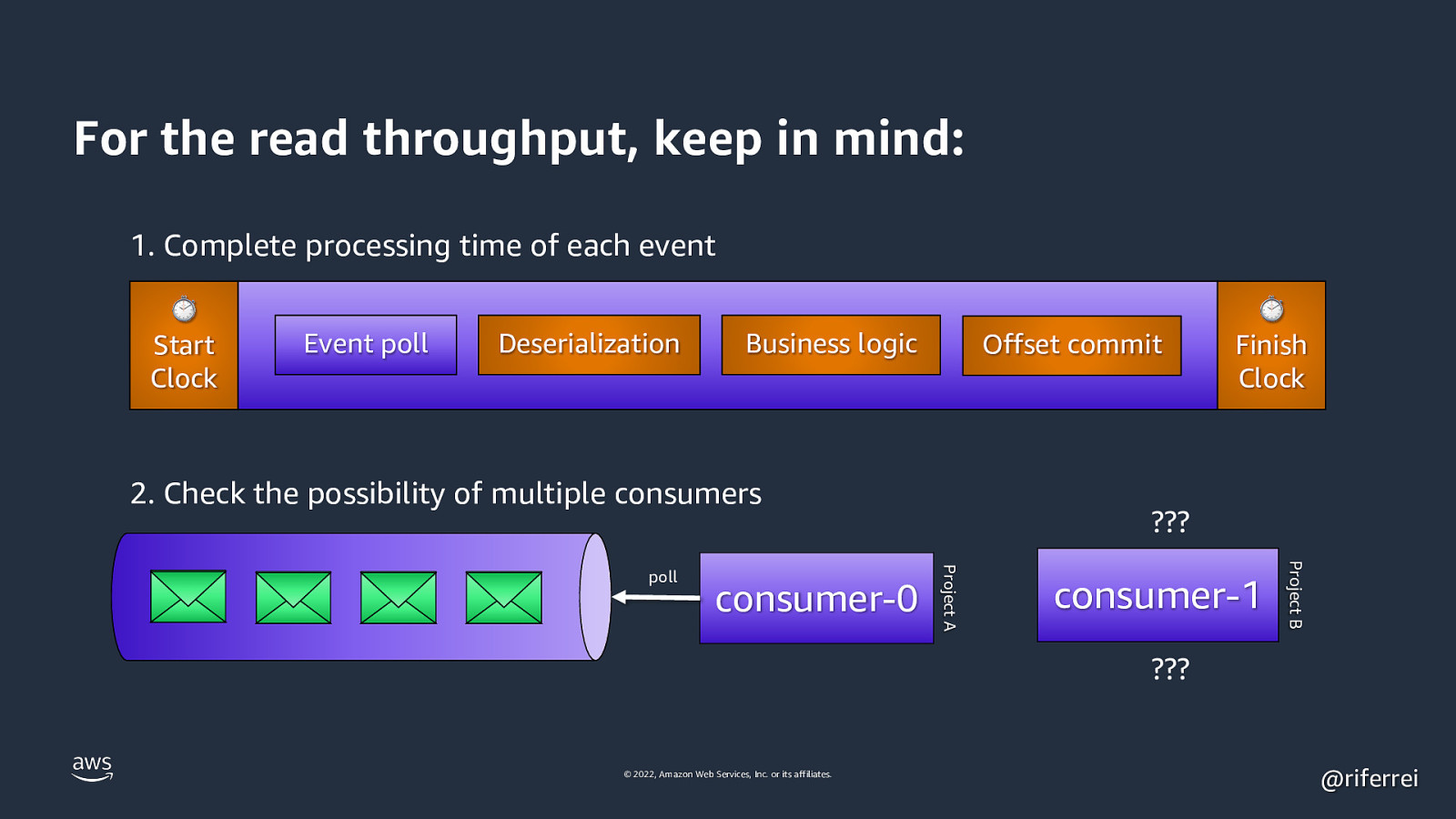

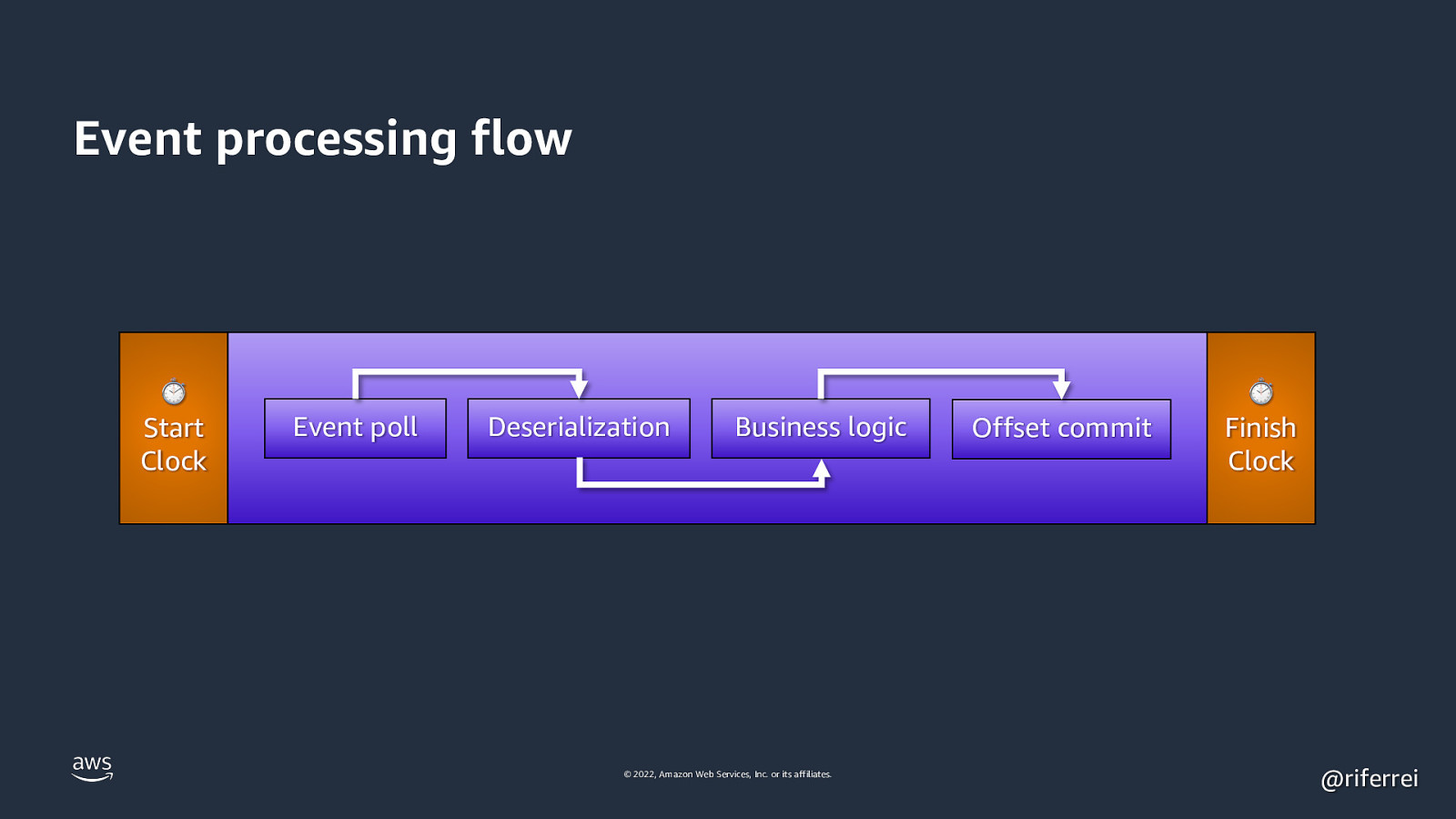

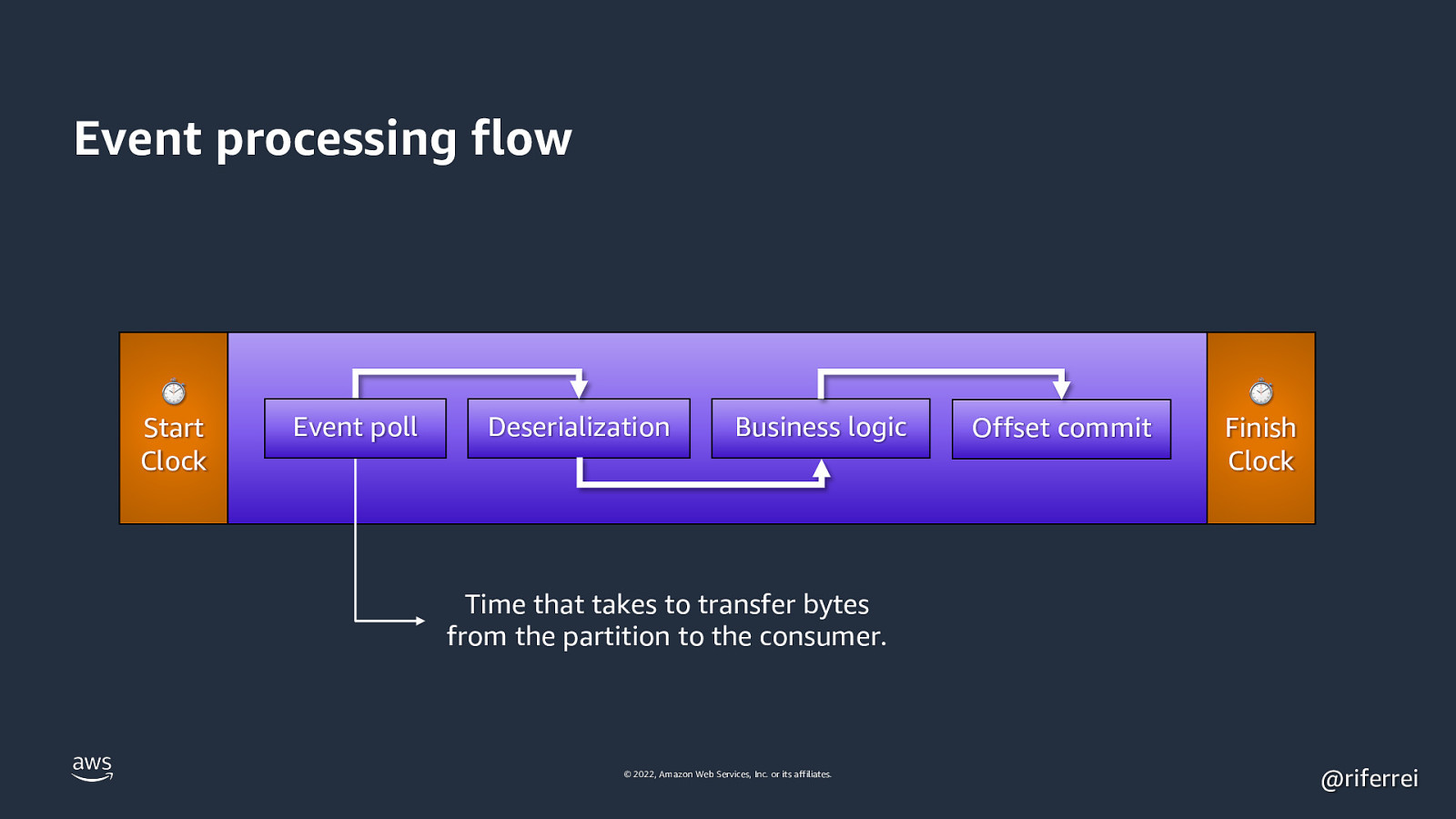

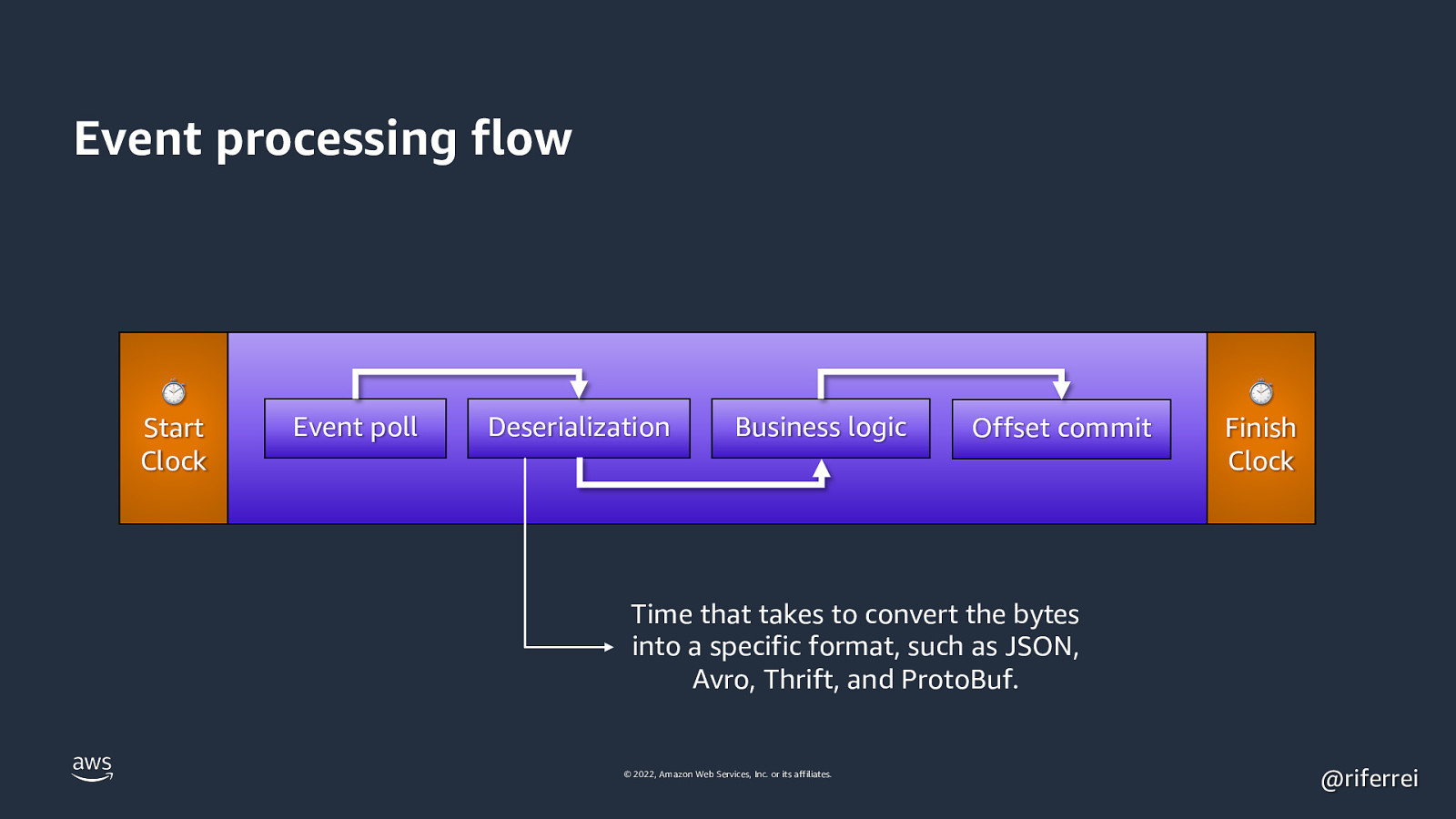

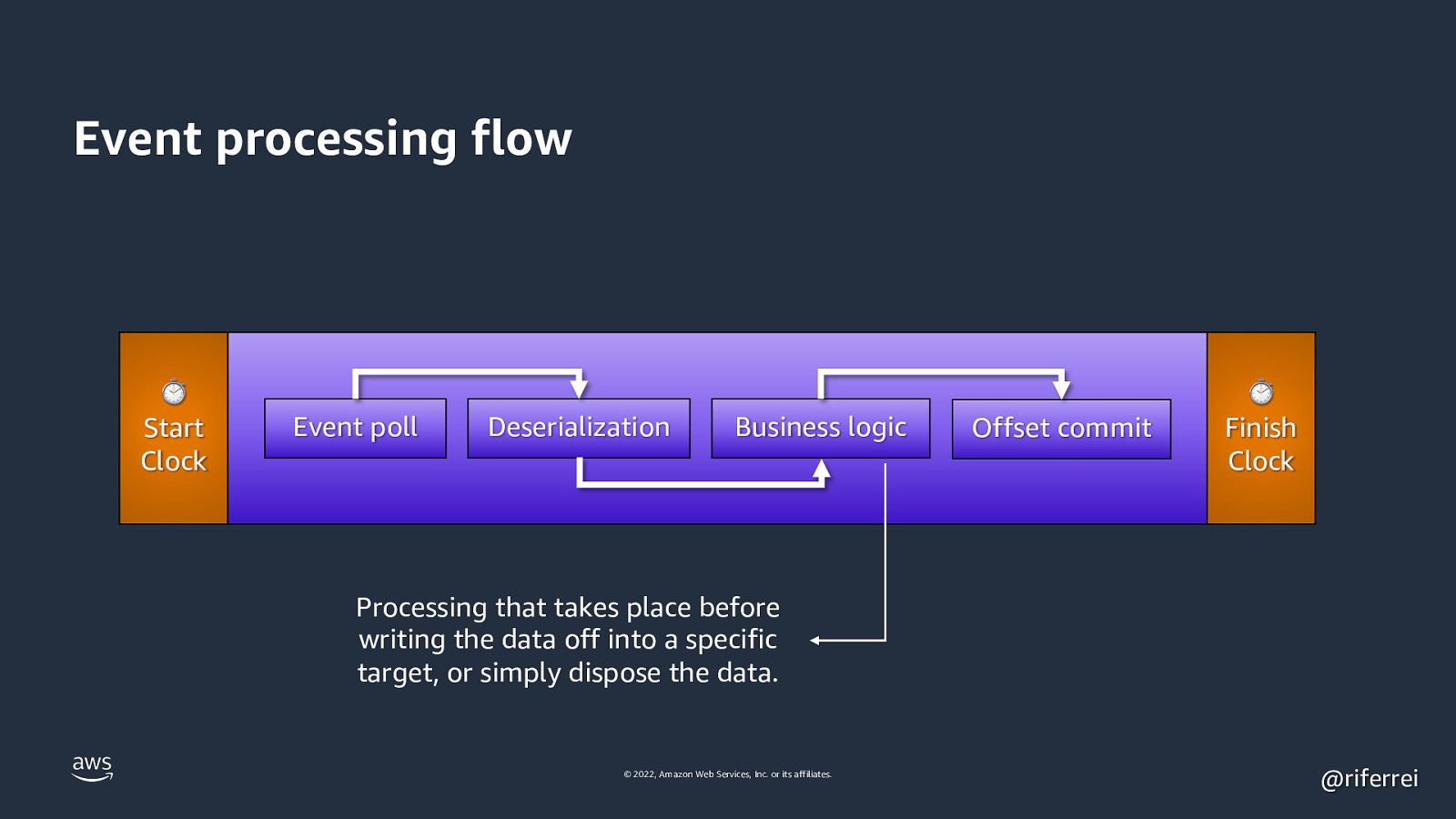

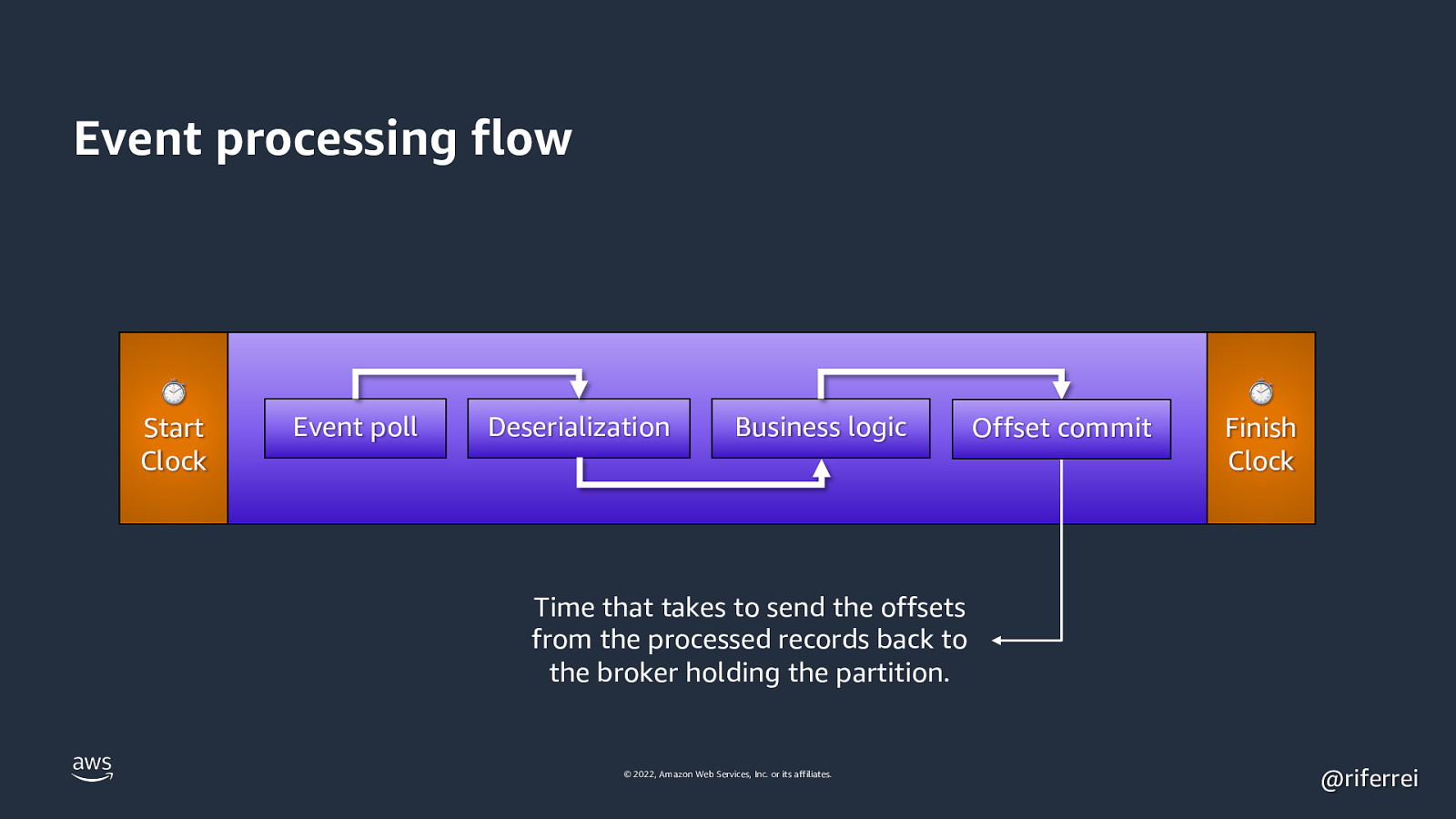

For the read throughput, keep in mind:
1. Complete processing time of each event: ⏱ Start Clock → Event poll → Deserialization → Business logic → Offset commit → ⏱ Finish Clock
2. Check the possibility of multiple consumers

[Diagram: consumer-0 and consumer-1, Project A and Project B, plus unknown consumers marked “???”.]
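One way to turn the first point into a number for R is to add up the time spent in each stage of a poll cycle and divide the batch size by it. The sketch below assumes the stage durations were measured elsewhere; the class name, method name, and the idea of a fixed batch size are illustrative assumptions, not something the slides prescribe.

```java
import java.time.Duration;

/** Hypothetical helper that derives read throughput (R) from measured stage times. */
final class ReadThroughput {

    /**
     * @param poll            time to transfer one batch from the partition
     * @param deserialization time to deserialize that batch
     * @param businessLogic   time to process that batch
     * @param offsetCommit    time to commit the offsets of that batch
     * @param eventsPerBatch  number of events in the batch (assumed constant)
     * @return events/second sustained by one consumer
     */
    static double eventsPerSecond(Duration poll, Duration deserialization,
                                  Duration businessLogic, Duration offsetCommit,
                                  int eventsPerBatch) {
        Duration total = poll.plus(deserialization).plus(businessLogic).plus(offsetCommit);
        if (total.isZero() || total.isNegative() || eventsPerBatch <= 0) {
            throw new IllegalArgumentException("durations and batch size must be positive");
        }
        double seconds = total.toNanos() / 1_000_000_000.0;
        return eventsPerBatch / seconds;
    }
}
```

With 20 ms of polling, 10 ms of deserialization, 60 ms of business logic, and 10 ms of offset commit for a batch of 500 events, the cycle takes 100 ms, which works out to roughly 5,000 events/second.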

Slide 11

Partitions as unit of parallelism

Slide 12

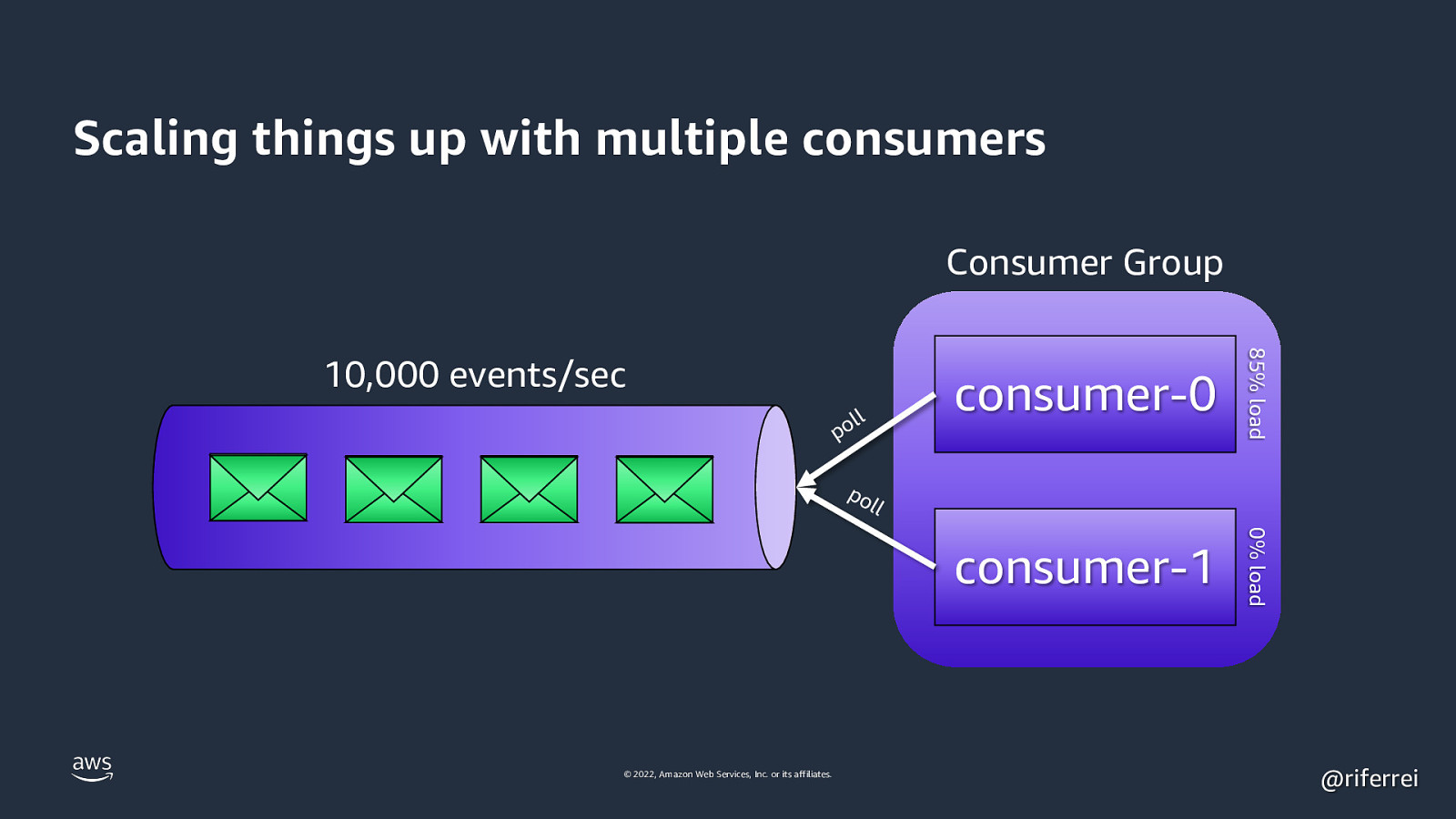

Scaling things up with multiple consumers

[Diagram: a Consumer Group with consumer-0 and consumer-1 polling a stream of 10,000 events/sec; one consumer is at 85% load and the other at 0% load.]

Slide 13

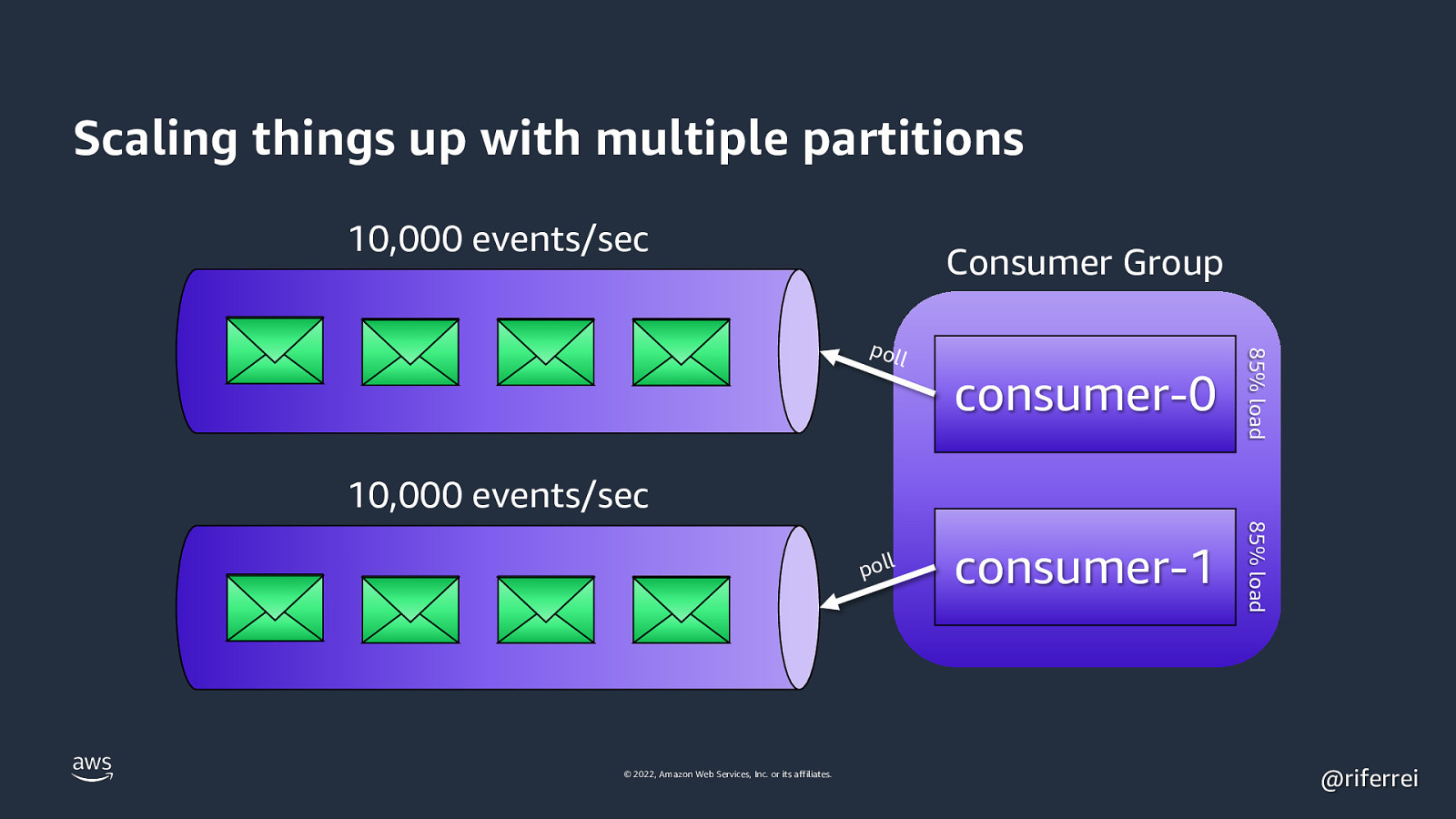

Scaling things up with multiple partitions

[Diagram: two streams of 10,000 events/sec each, polled by a Consumer Group; consumer-0 and consumer-1 are both at 85% load.]

Slide 14

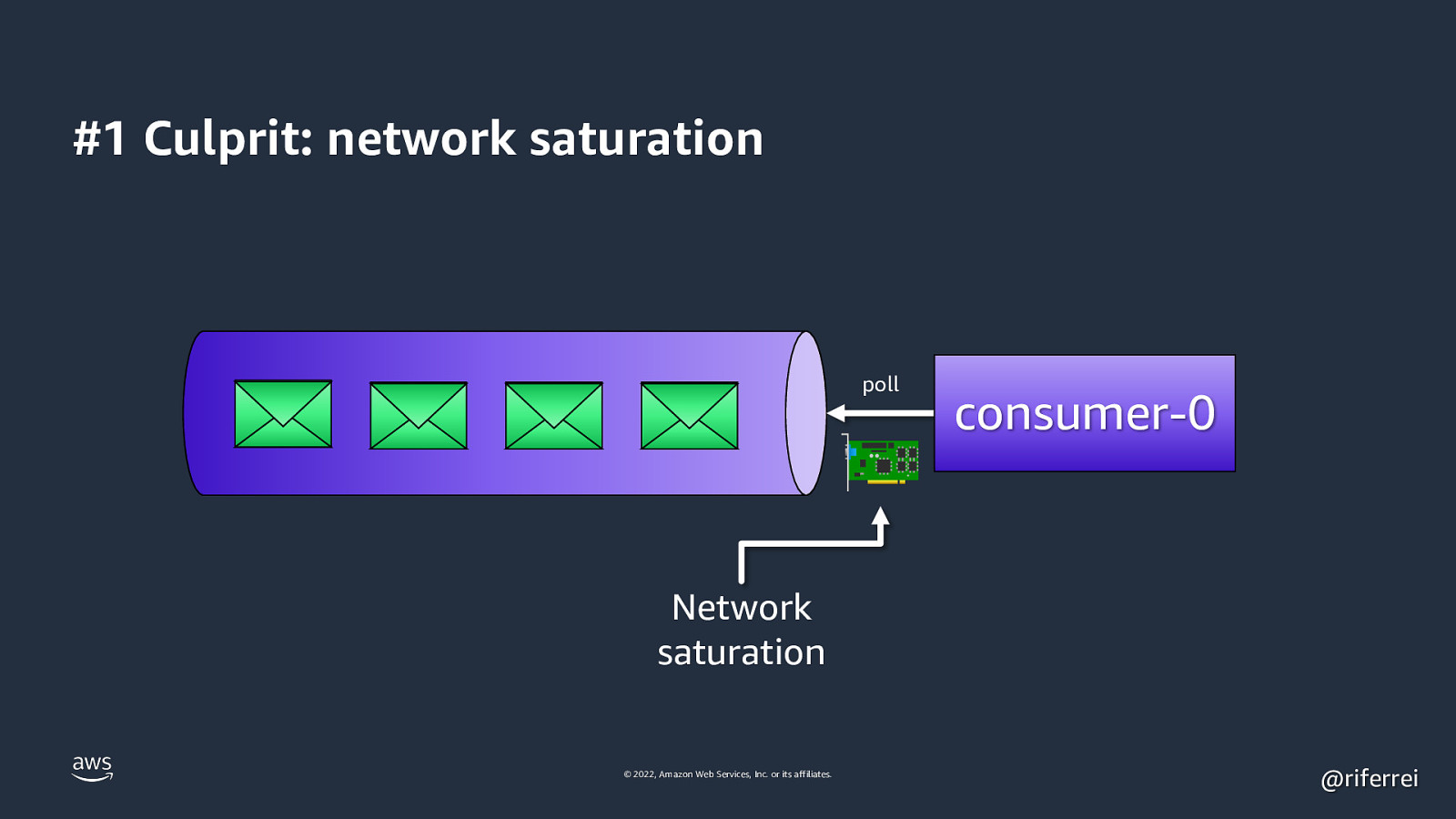

#1 Culprit: network saturation

[Diagram: consumer-0 polling 10,000 events/sec, with the network saturated.]

Slide 15

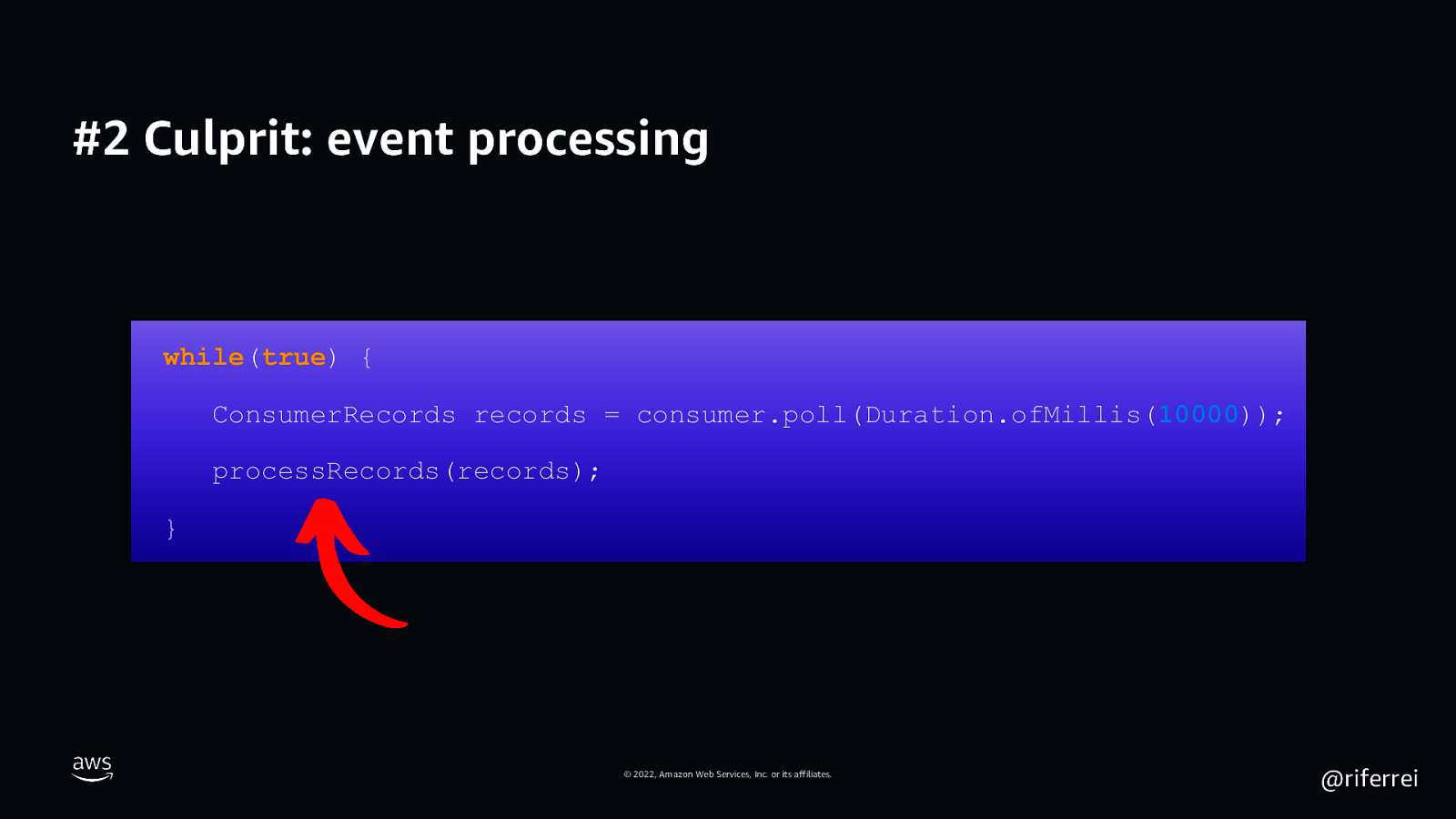

#2 Culprit: event processing

    while (true) {
        ConsumerRecords records = consumer.poll(Duration.ofMillis(10000));
        processRecords(records);
    }

Slide 16

Event processing flow: ⏱ Start Clock → Event poll → Deserialization → Business logic → Offset commit → ⏱ Finish Clock

Slide 17

Event processing flow: ⏱ Start Clock → Event poll → Deserialization → Business logic → Offset commit → ⏱ Finish Clock

Event poll: the time it takes to transfer bytes from the partition to the consumer.

Slide 18

Event processing flow: ⏱ Start Clock → Event poll → Deserialization → Business logic → Offset commit → ⏱ Finish Clock

Deserialization: the time it takes to convert the bytes into a specific format, such as JSON, Avro, Thrift, or ProtoBuf.

Slide 19

Event processing flow: ⏱ Start Clock → Event poll → Deserialization → Business logic → Offset commit → ⏱ Finish Clock

Business logic: the processing that takes place before writing the data to a specific target, or simply disposing of the data.

Slide 20

Event processing flow: ⏱ Start Clock → Event poll → Deserialization → Business logic → Offset commit → ⏱ Finish Clock

Offset commit: the time it takes to send the offsets of the processed records back to the broker holding the partition.

Slide 21

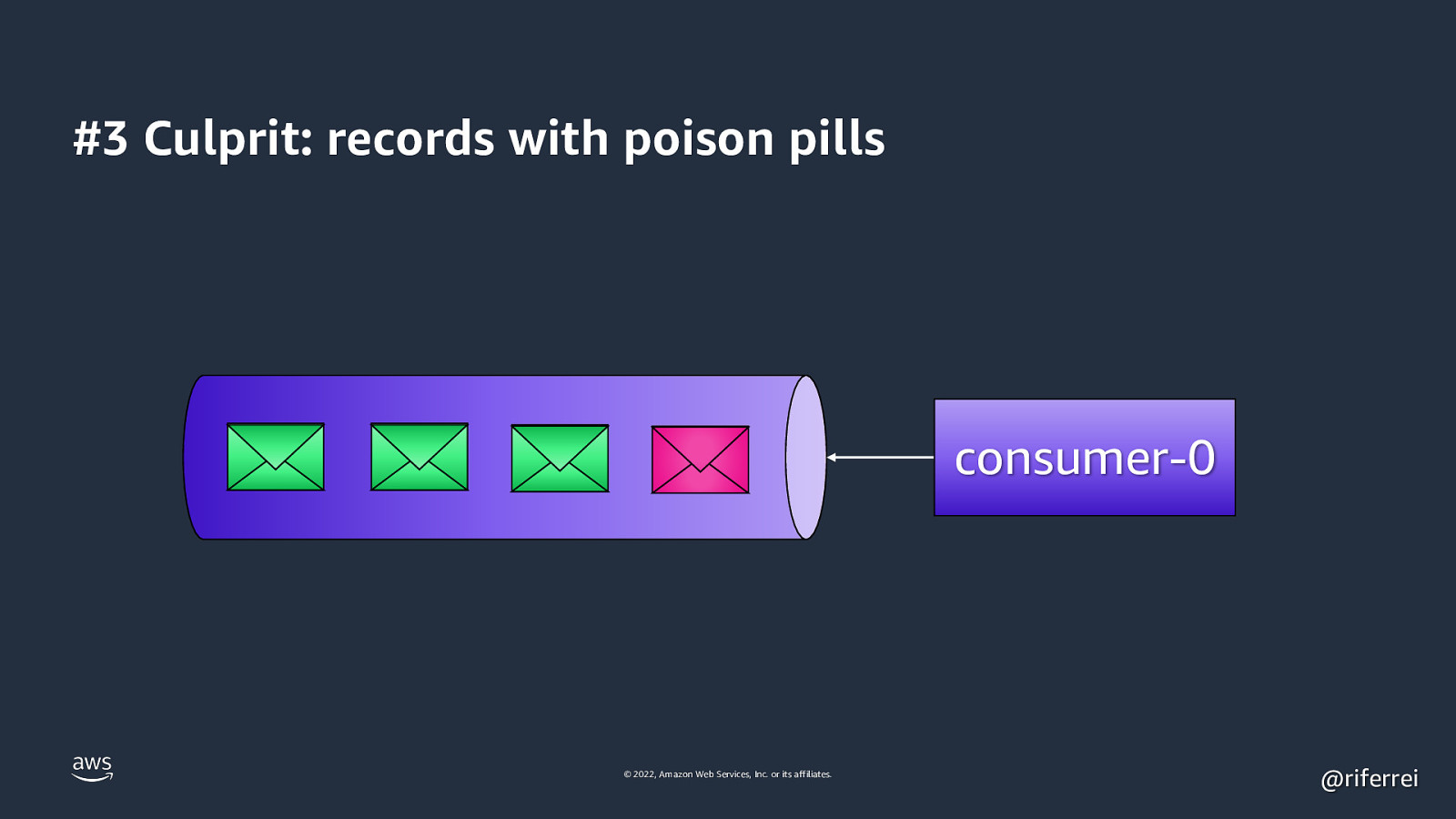

#3 Culprit: records with poison pills

[Diagram: consumer-0 polling records that include a poison pill.]
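A poison pill is usually understood as a record the consumer cannot deserialize or process, so it keeps failing on the same record. The slide does not say how to deal with one; the sketch below shows one common approach, setting the bad record aside and moving on, under simplifying assumptions: records are plain strings that should parse as integers, and the "dead letter" destination is just an in-memory list. All names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch: isolate records that cannot be parsed instead of failing the batch. */
final class PoisonPillHandling {

    /** Parsed values plus the raw records that had to be set aside. */
    static final class BatchResult {
        final List<Integer> processed;
        final List<String> deadLetters;

        BatchResult(List<Integer> processed, List<String> deadLetters) {
            this.processed = processed;
            this.deadLetters = deadLetters;
        }
    }

    static BatchResult processBatch(List<String> rawRecords) {
        List<Integer> processed = new ArrayList<>();
        List<String> deadLetters = new ArrayList<>();
        for (String raw : rawRecords) {
            try {
                // Stand-in for deserialization: a well-formed record parses as an int.
                processed.add(Integer.parseInt(raw.trim()));
            } catch (NumberFormatException e) {
                // Poison pill: keep it for later inspection and continue with the batch.
                deadLetters.add(raw);
            }
        }
        return new BatchResult(processed, deadLetters);
    }
}
```

Without the `catch`, the first malformed record would abort the whole batch, and a consumer that retries from the same offset would hit it again on every poll.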

Slide 22

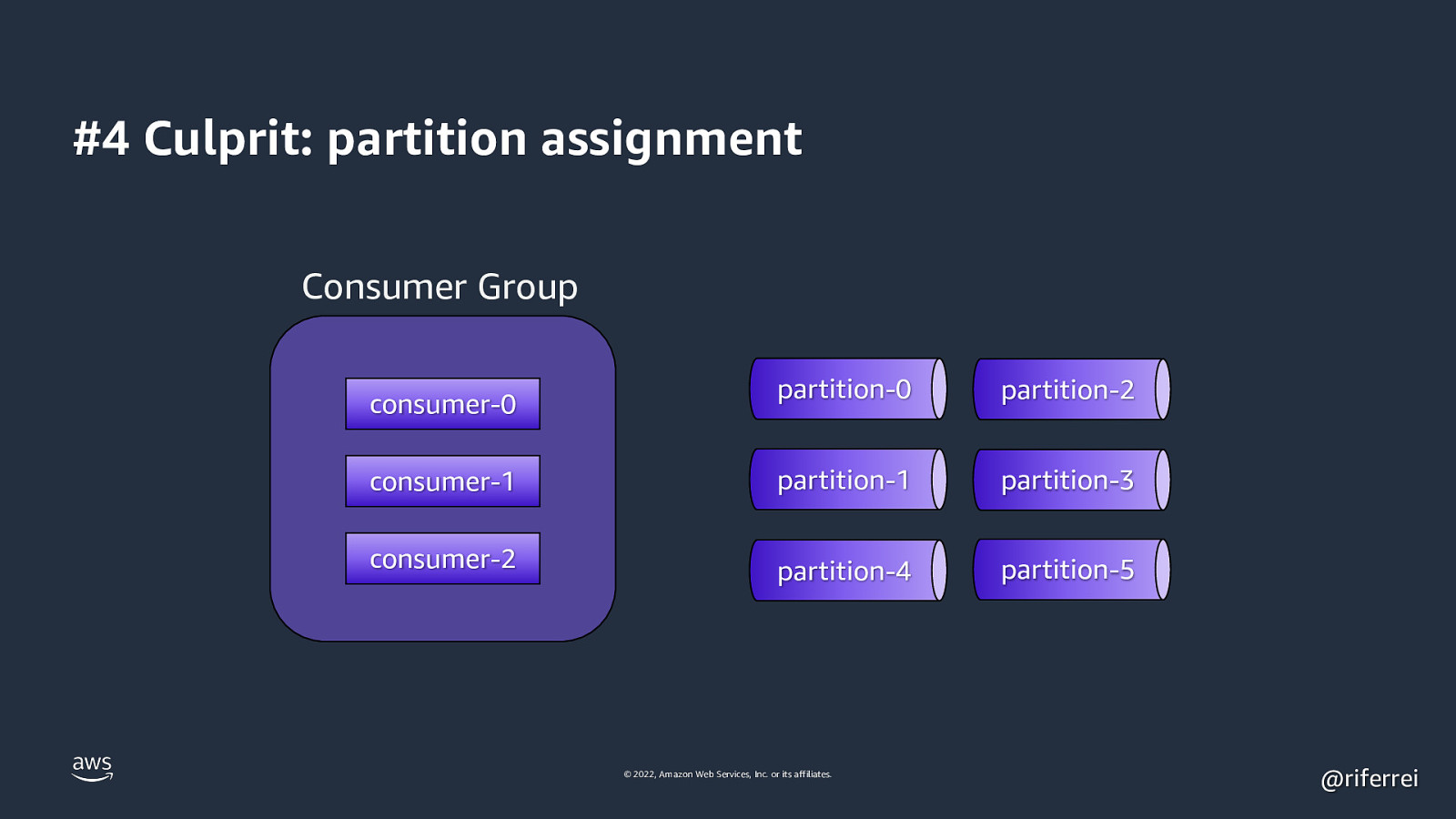

#4 Culprit: partition assignment

[Diagram: partition-0 through partition-5 assigned to a Consumer Group with consumer-0, consumer-1, and consumer-2.]

Slide 23

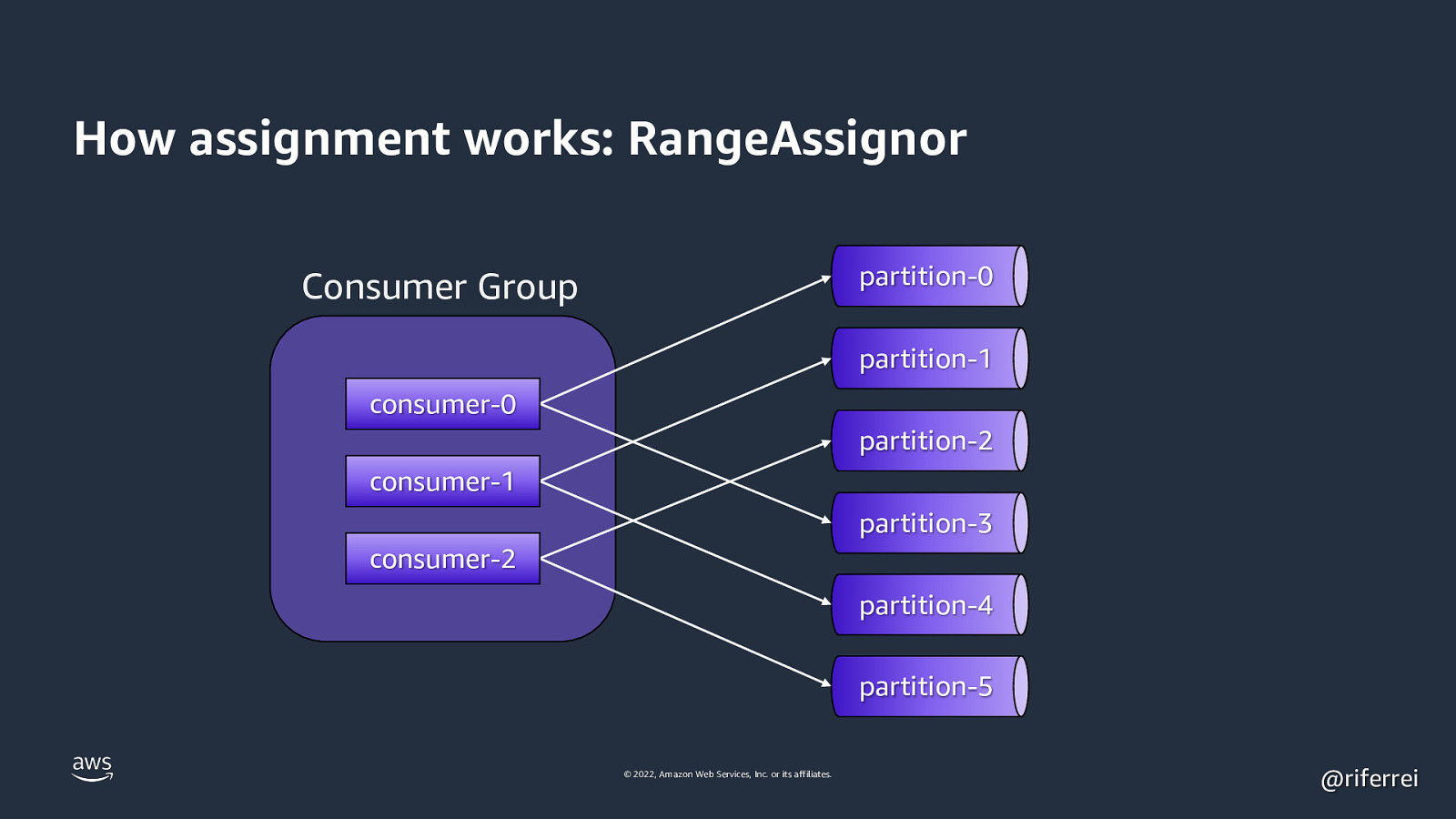

How assignment works: RangeAssignor

[Diagram: partition-0 through partition-5 and a Consumer Group with consumer-0, consumer-1, and consumer-2.]

Slide 24

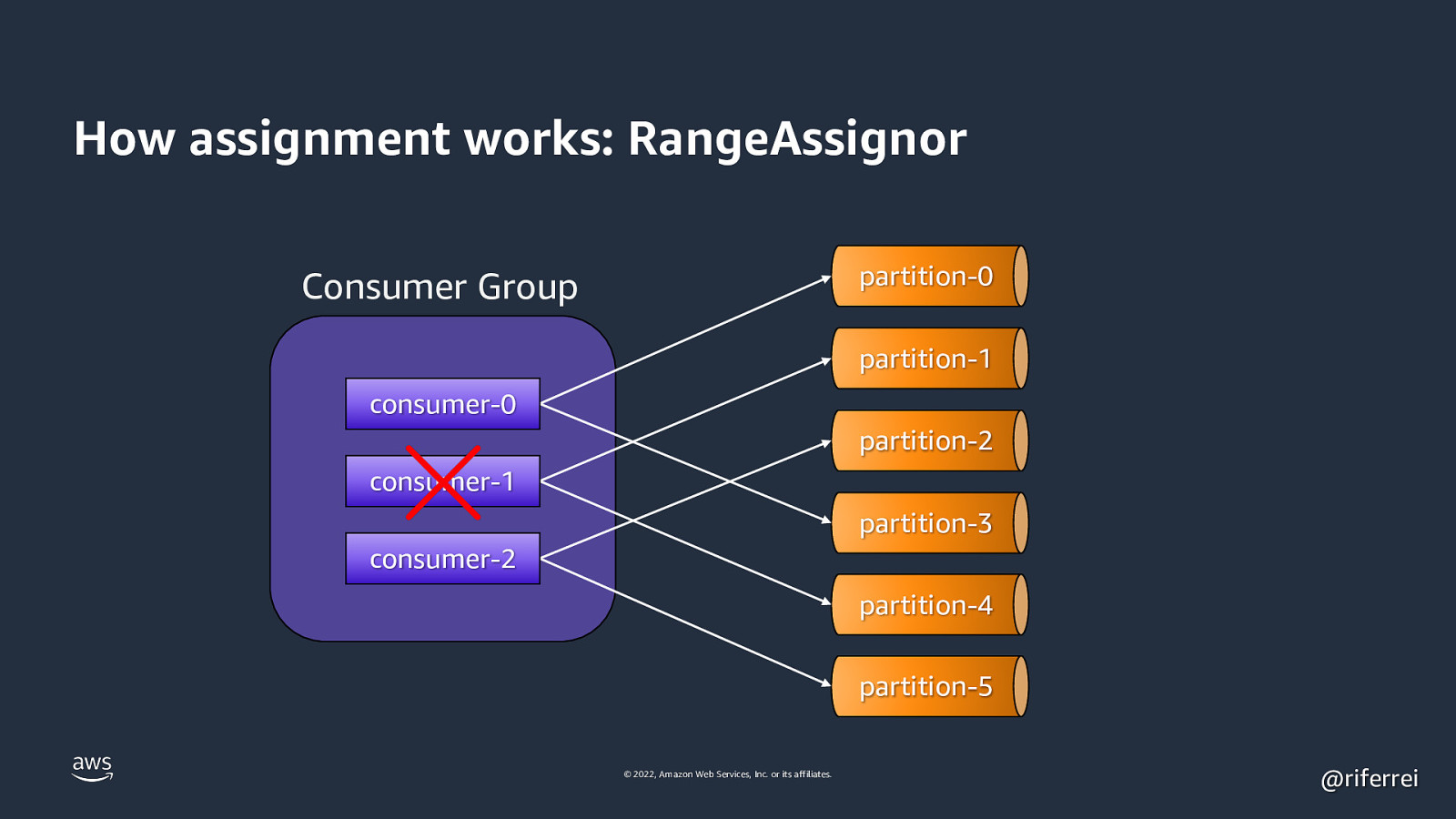

How assignment works: RangeAssignor

[Diagram: partition-0 through partition-5 and a Consumer Group with consumer-0, consumer-1, and consumer-2.]

Slide 25

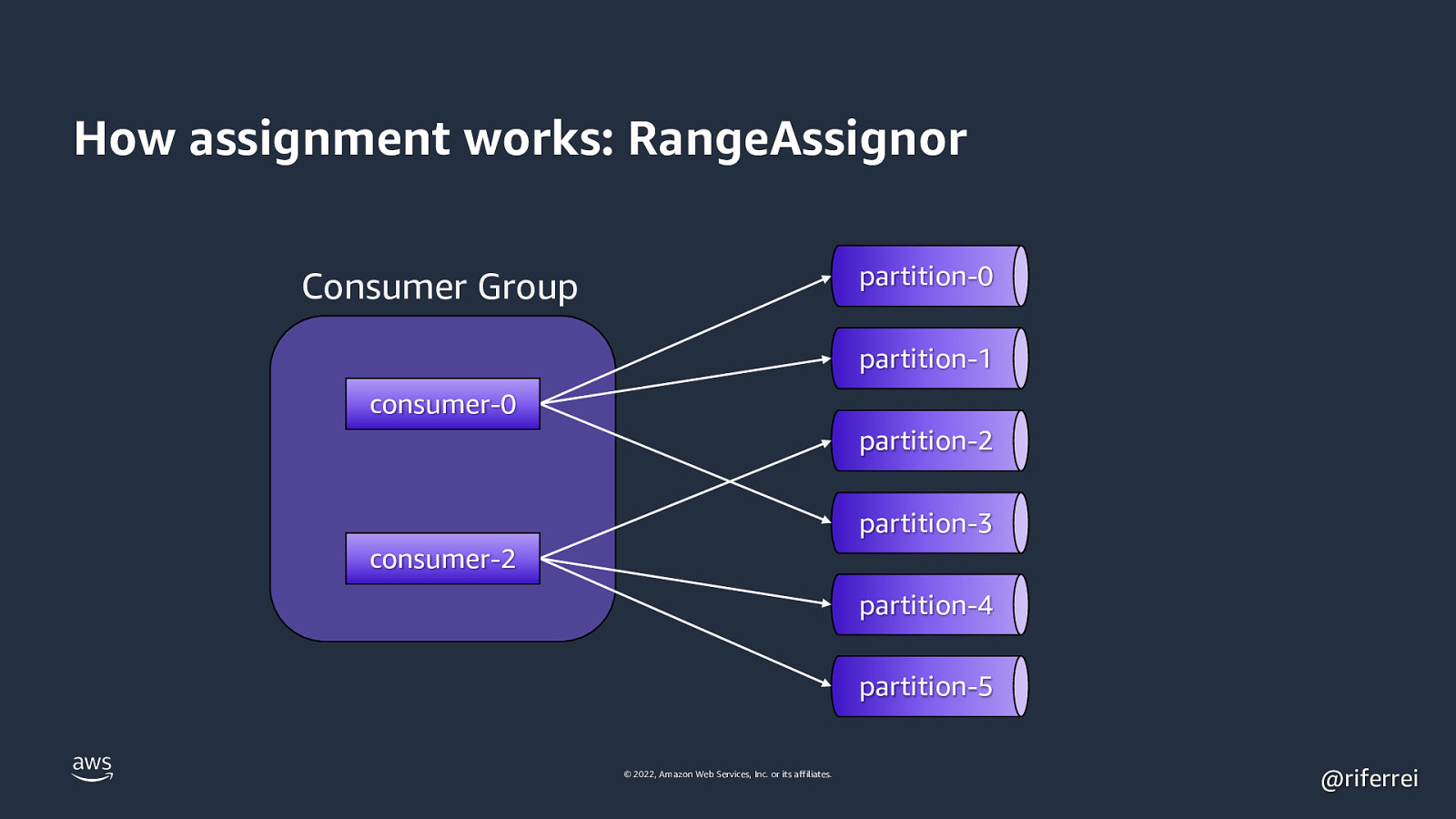

How assignment works: RangeAssignor

[Diagram: partition-0 through partition-5 and a Consumer Group with consumer-0 and consumer-2; consumer-1 is no longer present.]
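The range strategy pictured above can be approximated in plain Java. This is a simplified, single-topic sketch and not Kafka's `RangeAssignor` source: consumers are sorted by name, each one receives a contiguous range of partitions, and the first consumers absorb any remainder. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeSet;

/** Simplified single-topic illustration of range-style assignment. */
final class RangeAssignmentSketch {

    static Map<String, List<Integer>> assign(int numPartitions, List<String> consumers) {
        // Sort consumers so the outcome is deterministic.
        List<String> sorted = new ArrayList<>(new TreeSet<>(consumers));
        Map<String, List<Integer>> assignment = new LinkedHashMap<>();
        if (sorted.isEmpty()) {
            return assignment;
        }
        int perConsumer = numPartitions / sorted.size();
        int consumersWithExtra = numPartitions % sorted.size();
        int next = 0;
        for (int i = 0; i < sorted.size(); i++) {
            int count = perConsumer + (i < consumersWithExtra ? 1 : 0);
            List<Integer> range = new ArrayList<>();
            for (int p = next; p < next + count; p++) {
                range.add(p);
            }
            assignment.put(sorted.get(i), range);
            next += count;
        }
        return assignment;
    }
}
```

With six partitions and three consumers, each consumer gets two partitions. Once consumer-1 leaves, the ranges are recomputed from scratch: consumer-0 ends up with partitions 0 to 2 and consumer-2 with partitions 3 to 5, so partition-3 changes owner even though consumer-2 never went away. With one partition and two consumers, the second consumer receives nothing.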

Slide 26

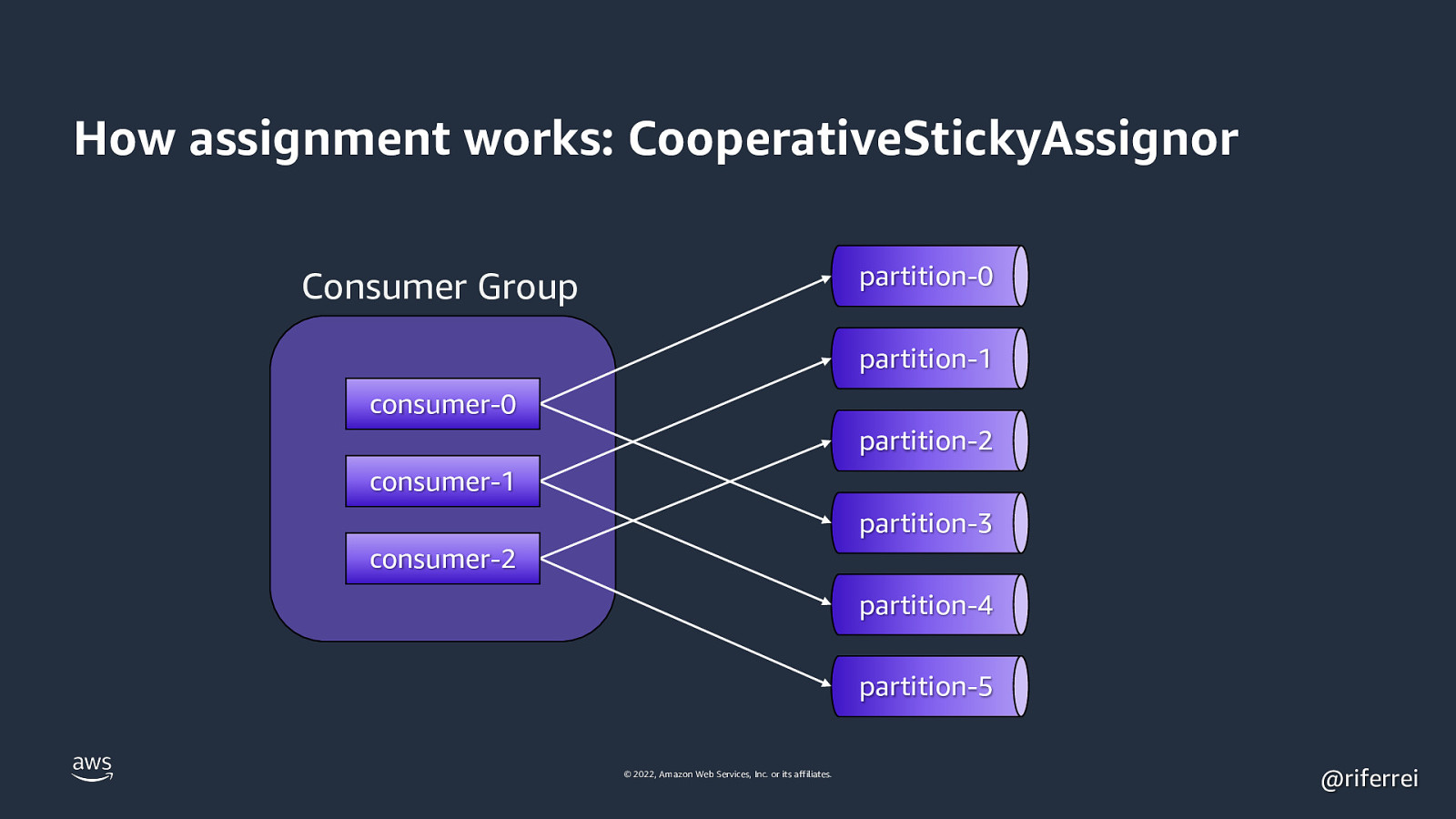

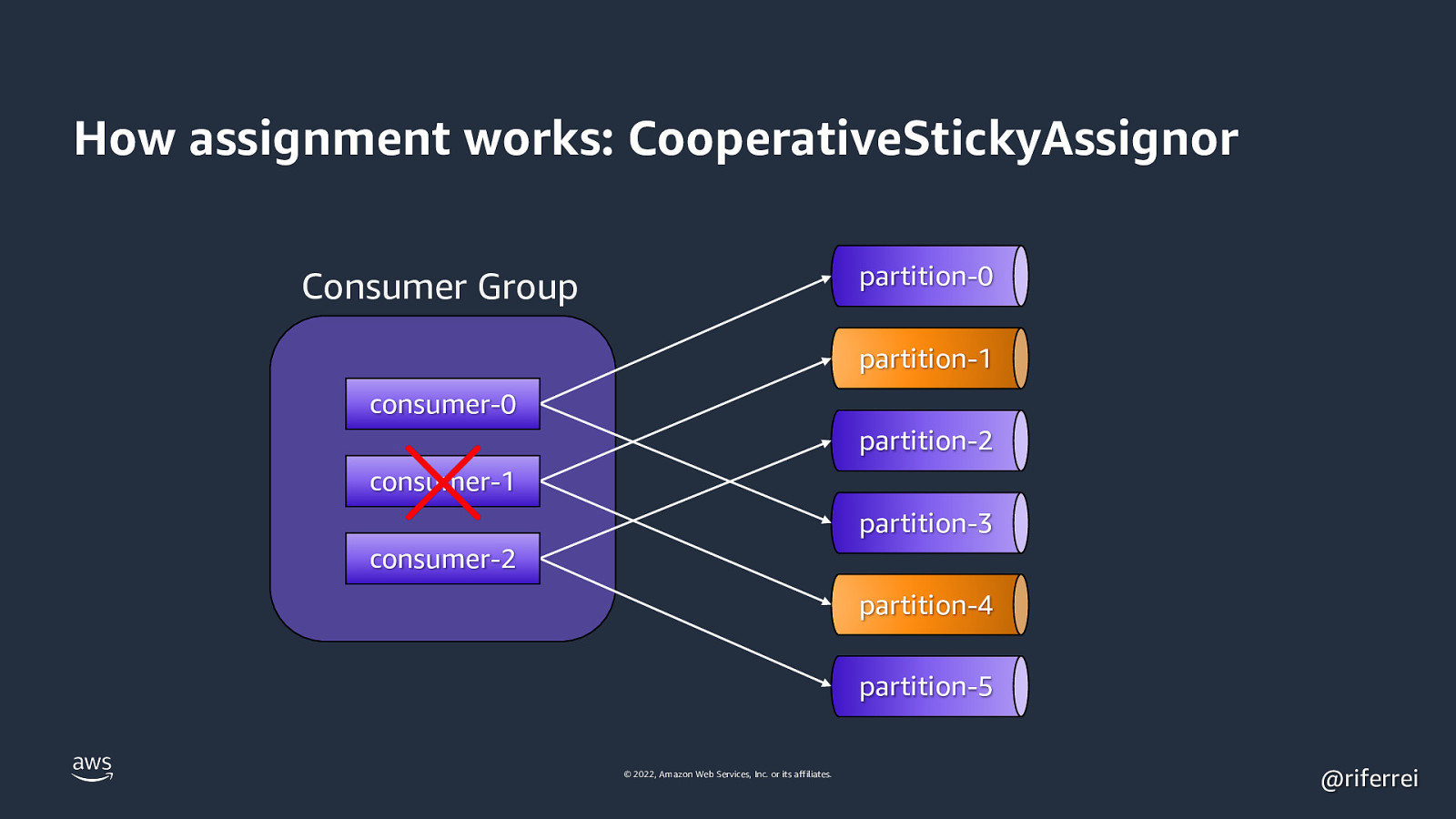

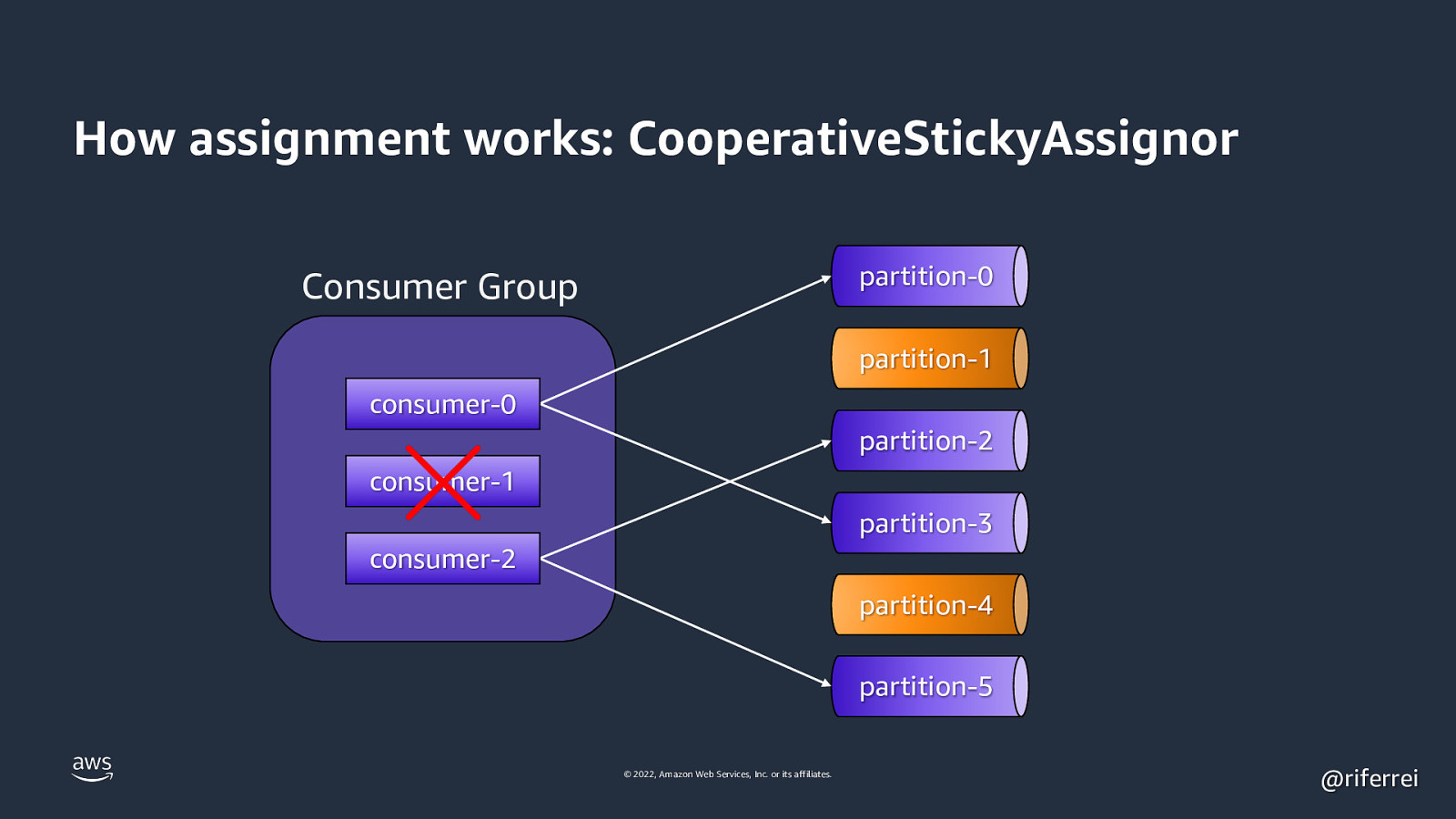

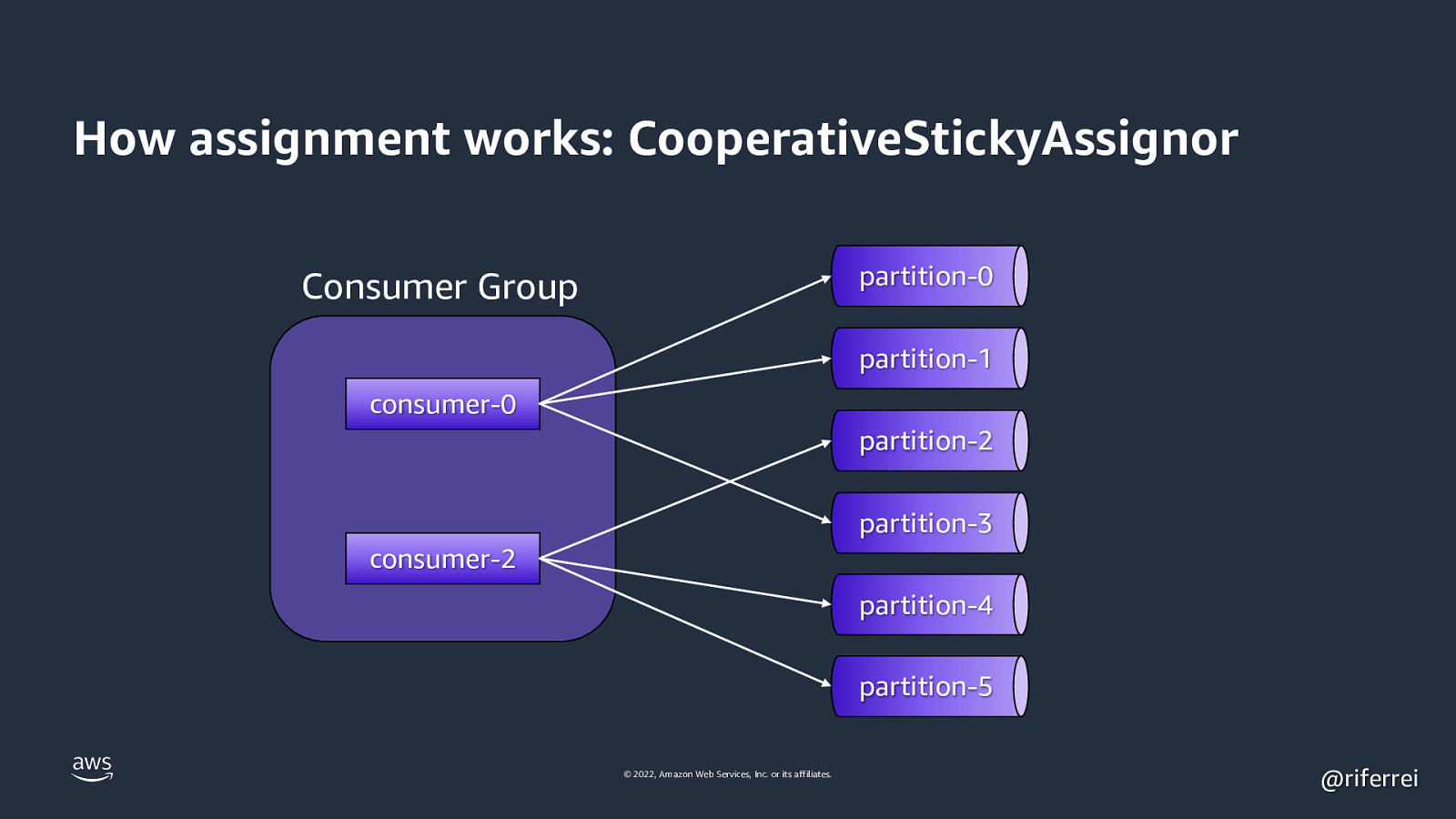

How assignment works: CooperativeStickyAssignor

[Diagram: partition-0 through partition-5 and a Consumer Group with consumer-0, consumer-1, and consumer-2.]

Slide 27

How assignment works: CooperativeStickyAssignor

[Diagram: partition-0 through partition-5 and a Consumer Group with consumer-0, consumer-1, and consumer-2.]

Slide 28

How assignment works: CooperativeStickyAssignor

[Diagram: partition-0 through partition-5 and a Consumer Group with consumer-0, consumer-1, and consumer-2.]

Slide 29

How assignment works: CooperativeStickyAssignor

[Diagram: partition-0 through partition-5 and a Consumer Group with consumer-0 and consumer-2; consumer-1 is no longer present.]
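The "sticky" idea can be illustrated with a small sketch: when a consumer leaves, the survivors keep what they already own and only the orphaned partitions are handed out. This is a deliberately simplified model with hypothetical names. It is not the actual `CooperativeStickyAssignor` algorithm, and it ignores the incremental rebalance protocol entirely.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Simplified illustration of sticky reassignment after one consumer leaves. */
final class StickyReassignmentSketch {

    static Map<String, List<Integer>> afterConsumerLeaves(
            Map<String, List<Integer>> current, String departed) {
        Map<String, List<Integer>> next = new TreeMap<>();
        List<Integer> orphaned = new ArrayList<>();
        for (Map.Entry<String, List<Integer>> entry : current.entrySet()) {
            if (entry.getKey().equals(departed)) {
                orphaned.addAll(entry.getValue());
            } else {
                // Survivors keep every partition they already own.
                next.put(entry.getKey(), new ArrayList<>(entry.getValue()));
            }
        }
        if (next.isEmpty()) {
            return next; // nobody left to take the orphaned partitions
        }
        for (Integer partition : orphaned) {
            // Hand each orphaned partition to the currently least-loaded survivor.
            String leastLoaded = null;
            for (String consumer : next.keySet()) {
                if (leastLoaded == null
                        || next.get(consumer).size() < next.get(leastLoaded).size()) {
                    leastLoaded = consumer;
                }
            }
            next.get(leastLoaded).add(partition);
        }
        return next;
    }
}
```

Starting from consumer-0 = [0, 1], consumer-1 = [2, 3], consumer-2 = [4, 5] and removing consumer-1, the sketch yields consumer-0 = [0, 1, 2] and consumer-2 = [4, 5, 3]. Unlike a range-style recomputation, no partition moves between consumers that stayed in the group.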

Slide 30

#5 Culprit: data partitioning

[Diagram: Producer → Send(event) → Broker]

Slide 31

“When no keys are specified, data is spread over partitions using round-robin. Otherwise, records with the same key are written in the same partition.” - Most developers out there

Slide 32

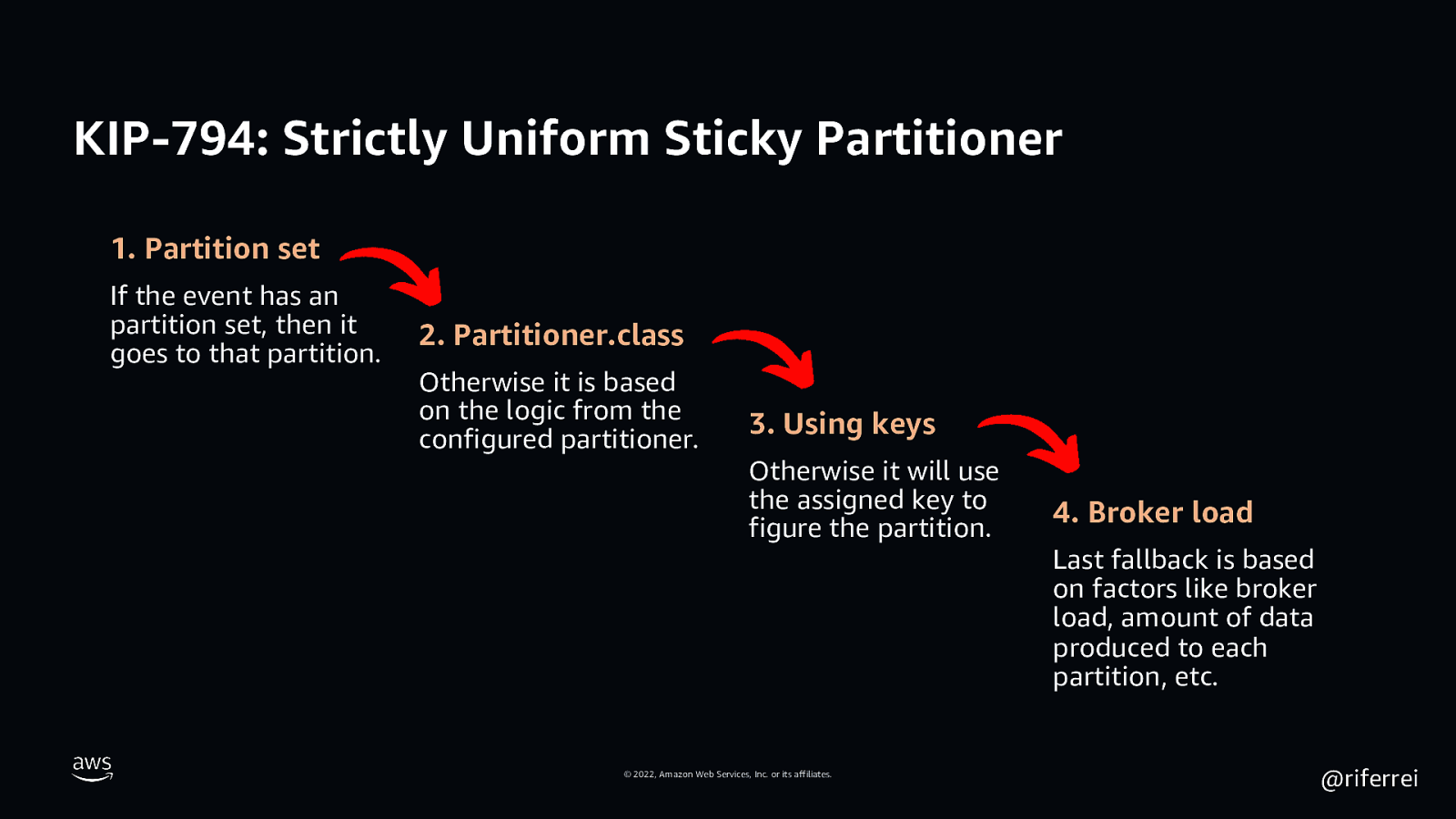

KIP-794: Strictly Uniform Sticky Partitioner
1. Partition set: if the event has a partition set, then it goes to that partition.
2. Partitioner.class: otherwise, it is based on the logic from the configured partitioner.
3. Using keys: otherwise, it will use the assigned key to figure out the partition.
4. Broker load: the last fallback is based on factors like broker load, amount of data produced to each partition, etc.
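The four-step order above can be read as a chain of fallbacks. The sketch below mirrors that order with hypothetical types. Two things in it are stand-ins rather than Kafka's behavior: the key hash (Kafka's built-in partitioner hashes the serialized key with murmur2, while `Arrays.hashCode` is used here only to keep the example self-contained) and the `Fallback` interface, which merely represents "whatever the load-aware logic picks".

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

/** Hypothetical sketch of the partition selection order listed on the slide. */
final class PartitionChoiceSketch {

    /** Stand-in for a user-configured partitioner. */
    interface CustomPartitioner {
        int partition(String key, int numPartitions);
    }

    /** Stand-in for the load-aware last fallback. */
    interface Fallback {
        int choose(int numPartitions);
    }

    static int choosePartition(Integer explicitPartition, CustomPartitioner custom,
                               String key, int numPartitions, Fallback fallback) {
        if (explicitPartition != null) {
            return explicitPartition;                     // 1. partition set on the event
        }
        if (custom != null) {
            return custom.partition(key, numPartitions);  // 2. configured partitioner
        }
        if (key != null) {
            // 3. key-based: the same key always maps to the same partition.
            int hash = Arrays.hashCode(key.getBytes(StandardCharsets.UTF_8));
            return (hash & 0x7fffffff) % numPartitions;   // mask keeps the value non-negative
        }
        return fallback.choose(numPartitions);            // 4. load-based fallback
    }
}
```

A practical consequence of step 3: because the result depends on `numPartitions`, adding partitions to a topic can send an existing key to a different partition than before.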

Slide 33

Partitions as unit of storage

Slide 34

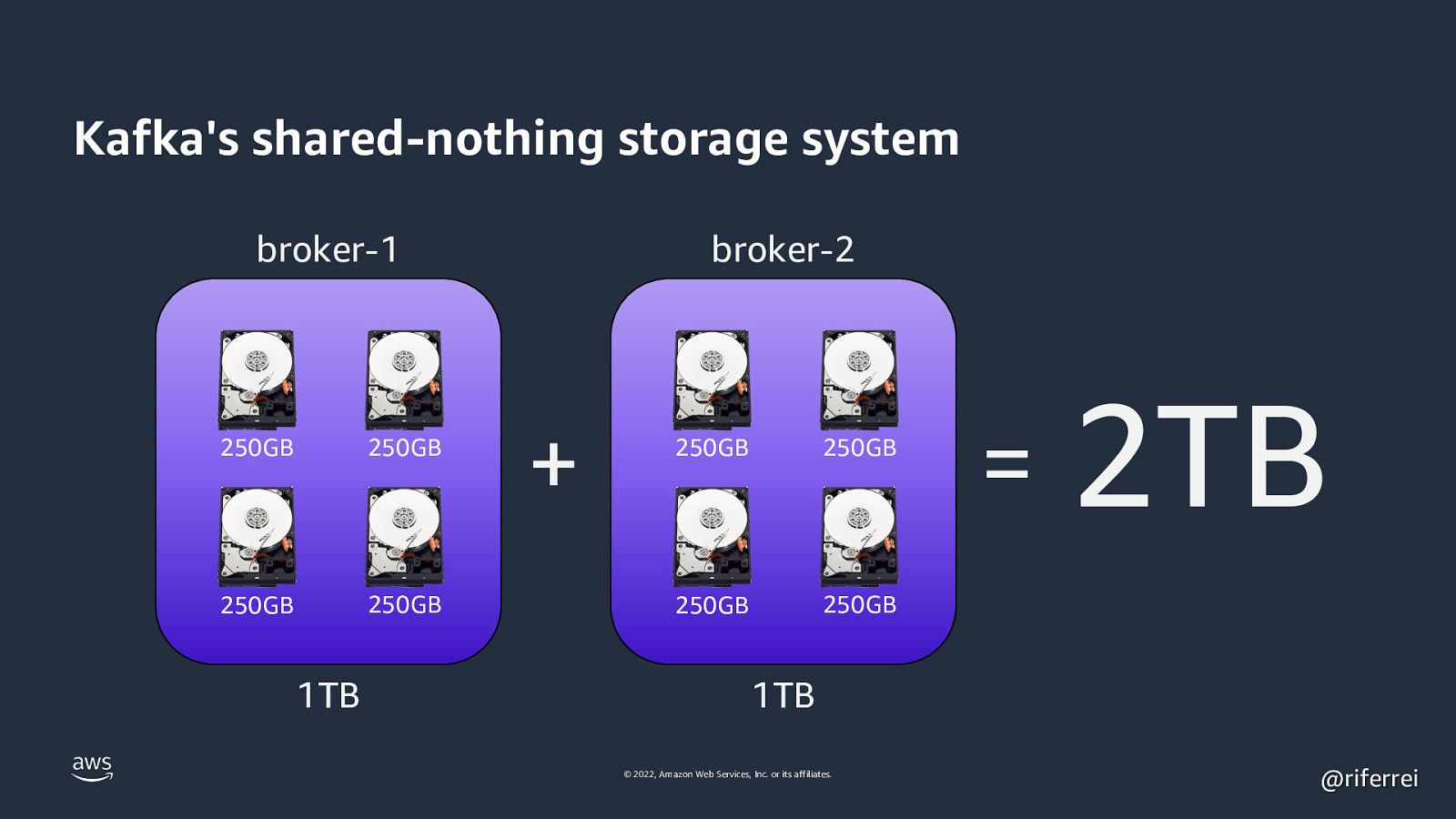

Kafka’s shared-nothing storage system

[Diagram: broker-1 with 4 × 250GB = 1TB, plus broker-2 with 4 × 250GB = 1TB, for 2TB in total.]

Slide 35

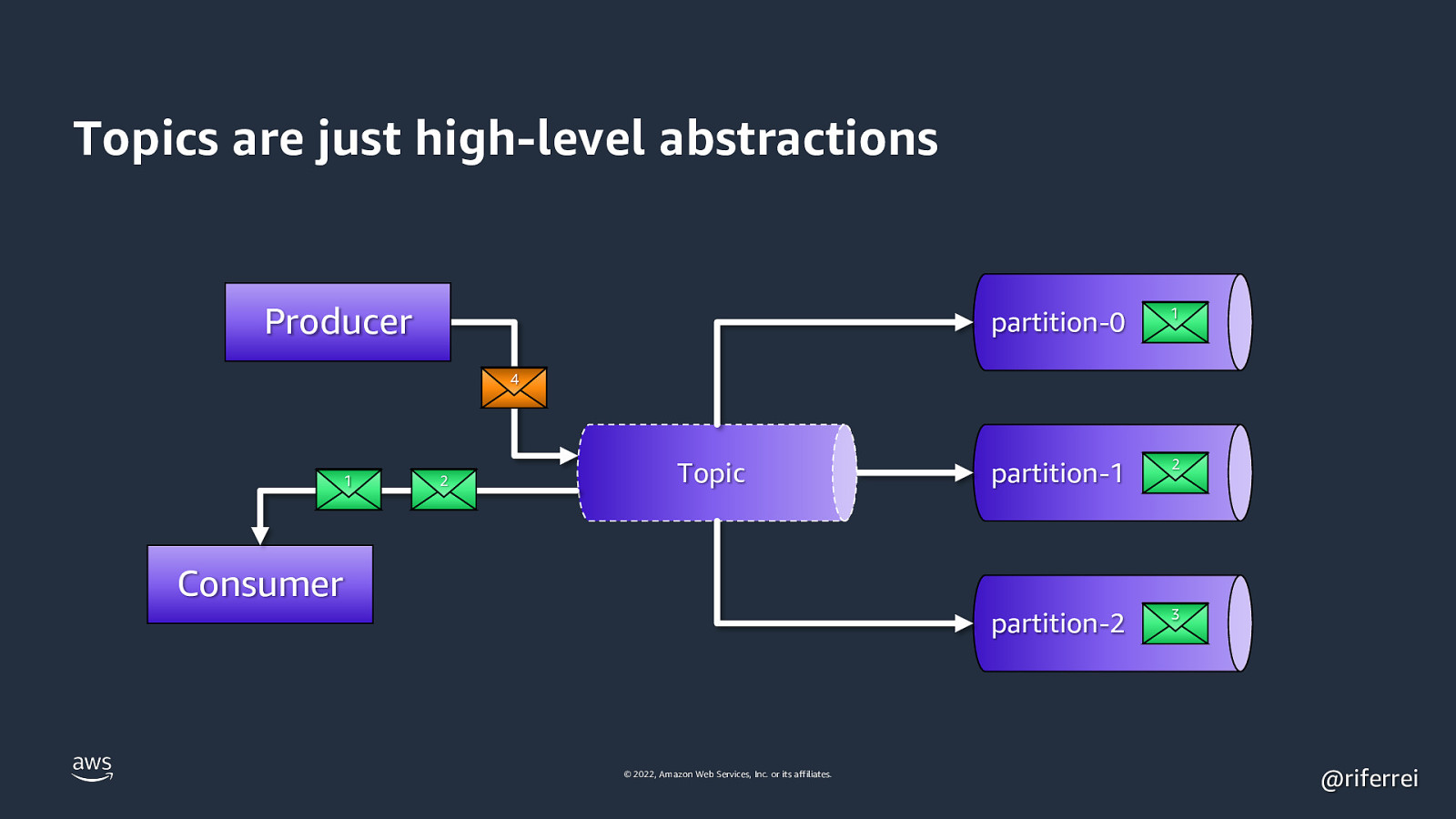

Topics are just high-level abstractions

[Diagram: a Producer writes numbered events to a Topic made of partition-0, partition-1, and partition-2; a Consumer reads from them.]

Slide 36

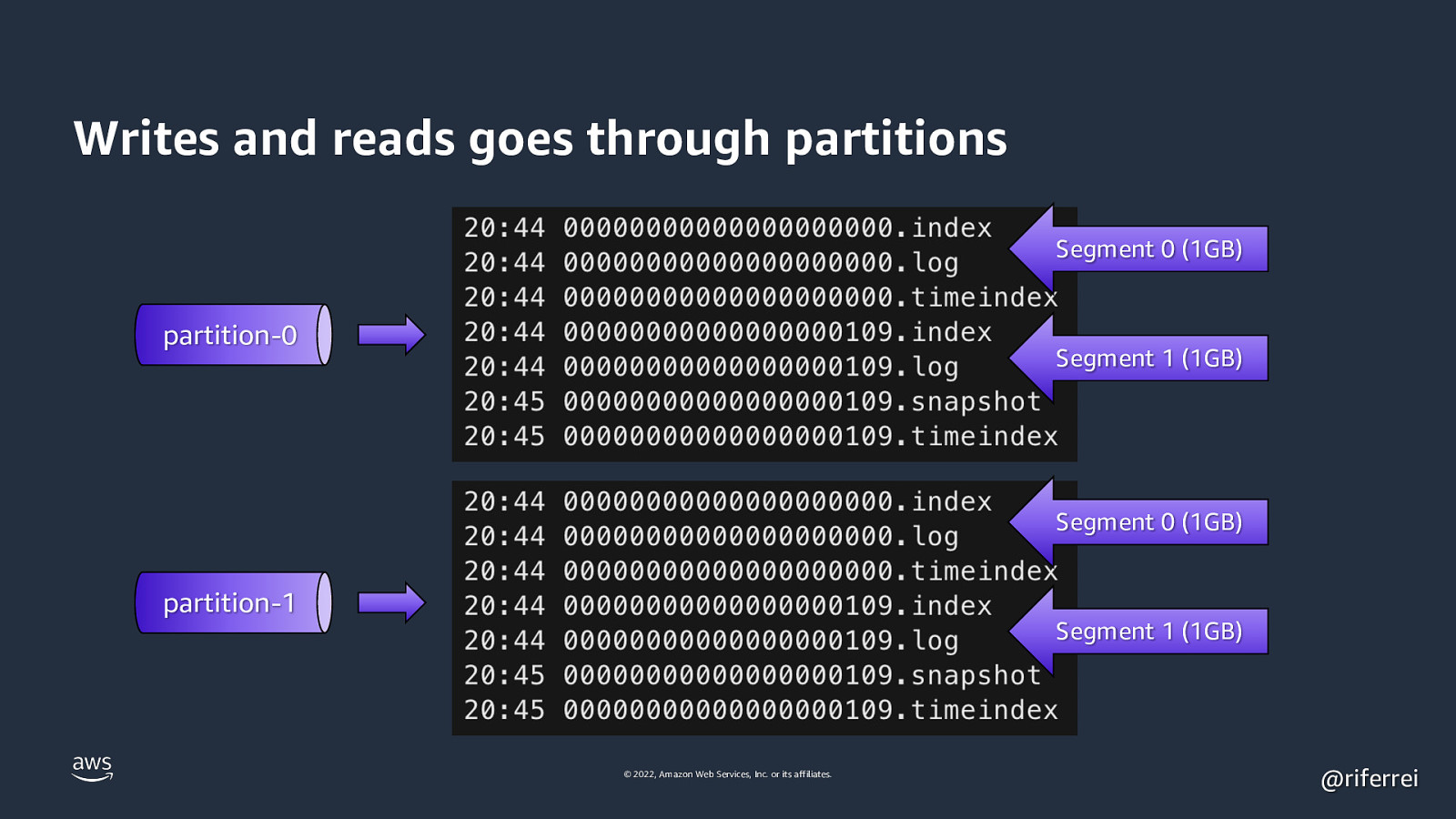

Writes and reads go through partitions

[Diagram: partition-0 and partition-1, each made of Segment 0 (1GB) and Segment 1 (1GB).]

Slide 37

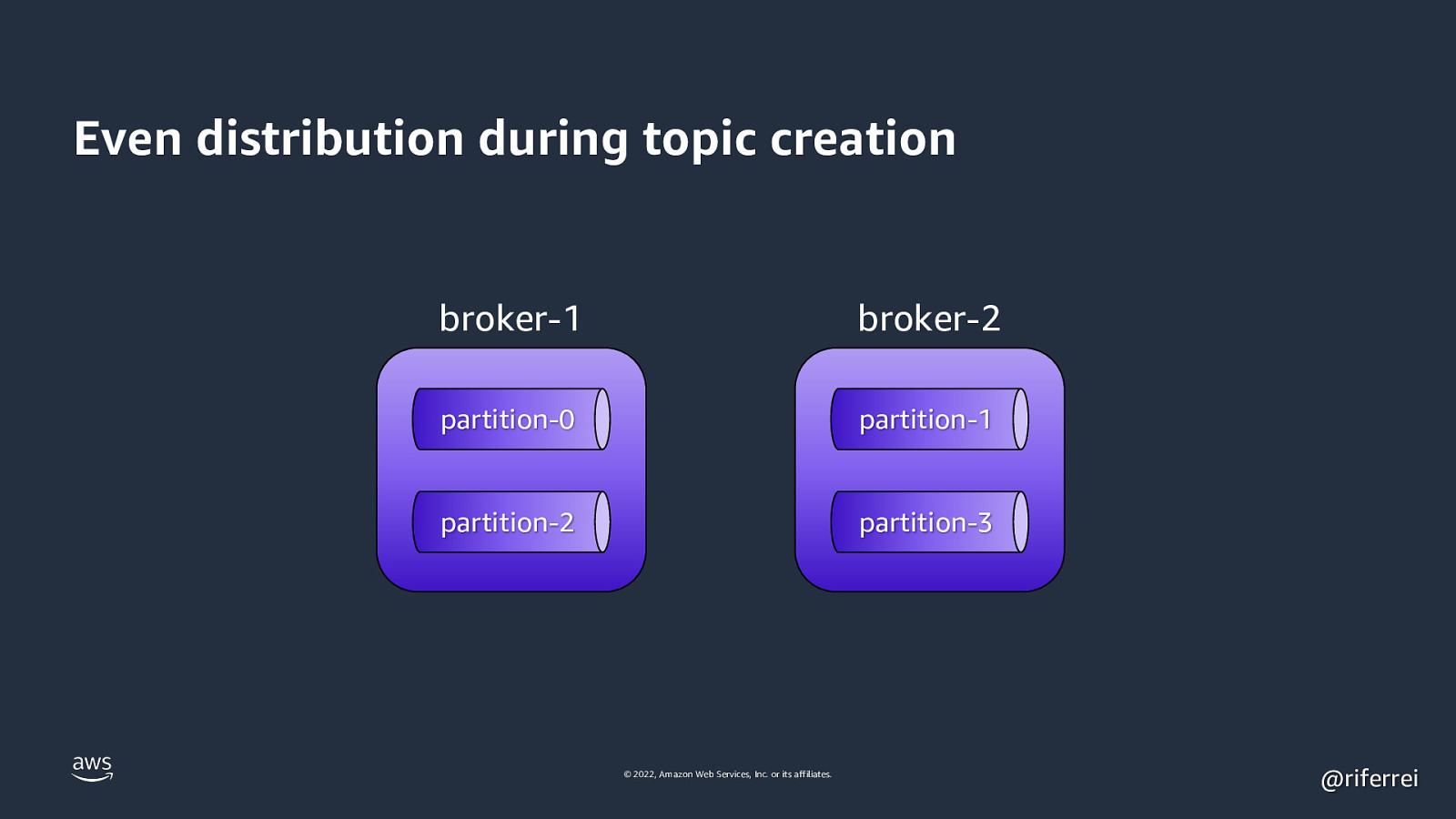

Even distribution during topic creation

[Diagram: partition-0 through partition-3 spread evenly across broker-1 and broker-2.]

Slide 38

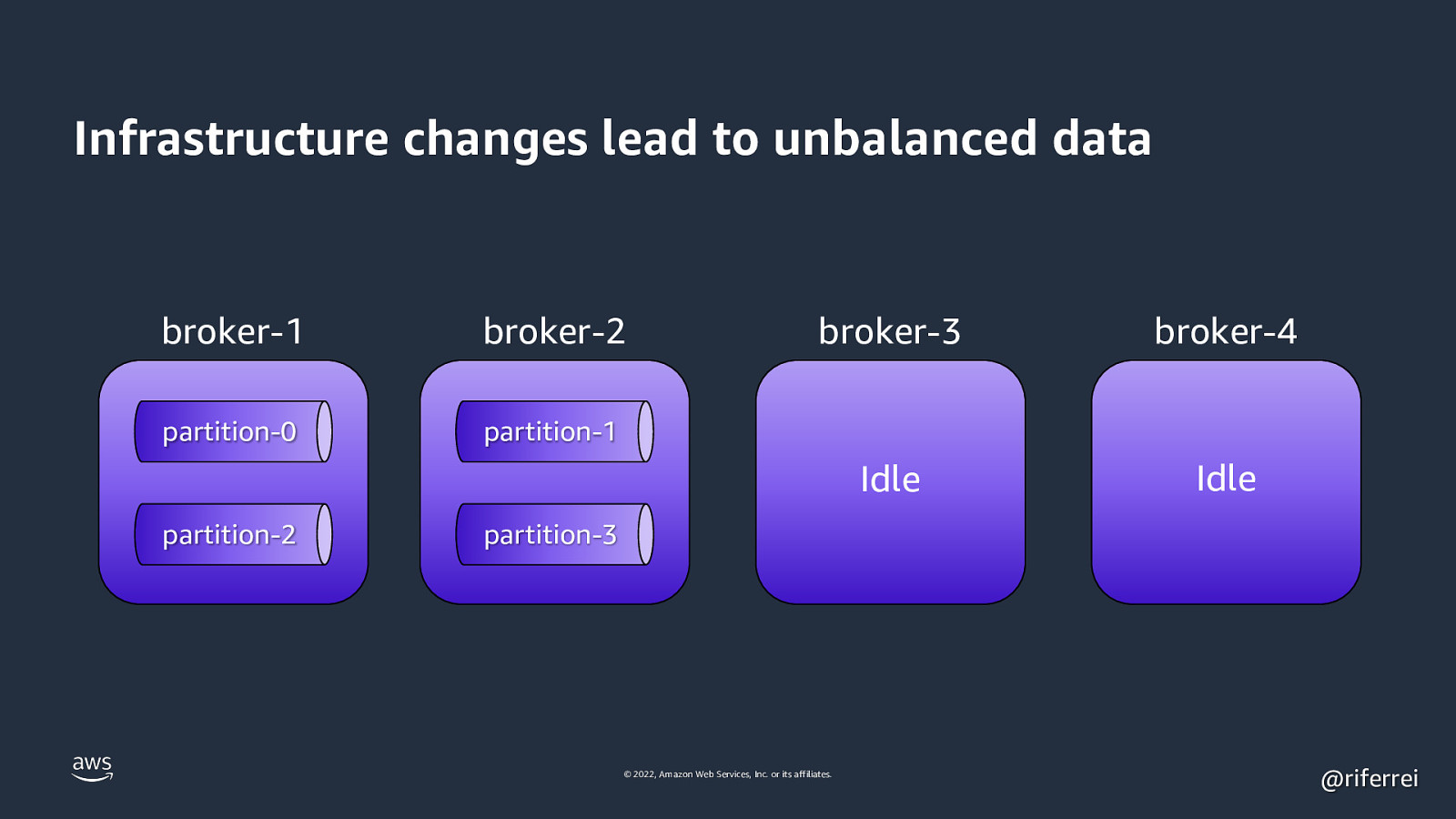

Infrastructure changes lead to unbalanced data

[Diagram: partition-0 through partition-3 remain on broker-1 and broker-2, while broker-3 and broker-4 are idle.]

Slide 39

How to reassign the partitions?
1. Bounce the entire cluster: this leads to unavailability, but once it’s back the partitions are reassigned.
2. kafka-reassign-partitions: a CLI tool with which you can reassign the partitions while the cluster is online.

Slide 40

Partitions as unit of durability

Slide 41

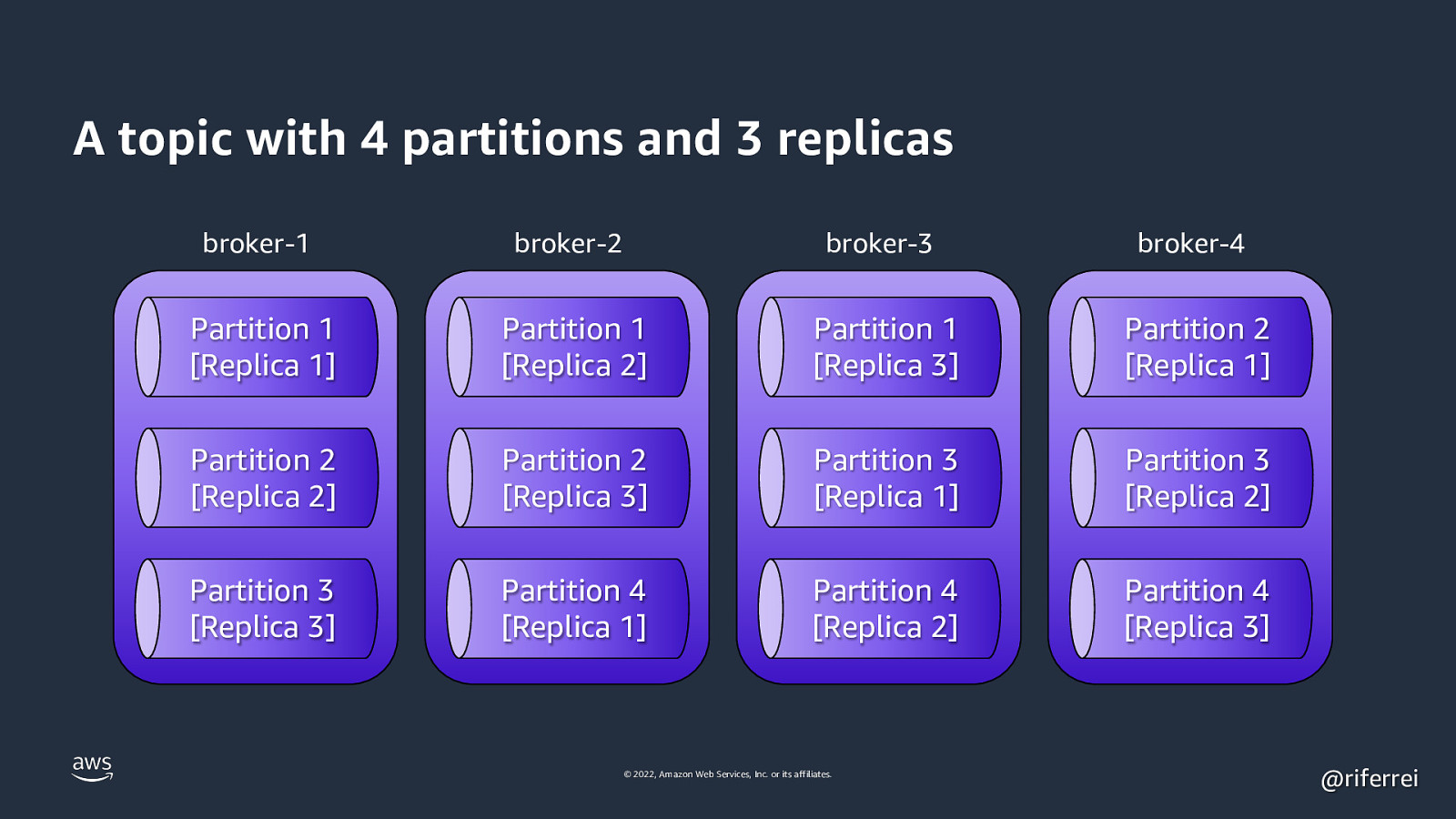

A topic with 4 partitions and 3 replicas

[Diagram: broker-1 through broker-4 hosting Partition 1 through Partition 4, each with Replica 1, Replica 2, and Replica 3.]
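To make the 4 partitions × 3 replicas layout concrete, the sketch below places replicas round-robin across brokers. The placement rule `(partition + replica) % brokers` is an assumption chosen for readability and is simpler than what Kafka actually does when creating a topic. The one property it does share with Kafka is that replicas of the same partition land on different brokers. Names and zero-based indices are hypothetical.

```java
/** Simplified illustration of spreading partition replicas across brokers. */
final class ReplicaPlacementSketch {

    /** Returns placement[p][r] = zero-based index of the broker hosting replica r of partition p. */
    static int[][] place(int partitions, int replicationFactor, int brokers) {
        if (replicationFactor > brokers) {
            // Each replica of a partition needs its own broker.
            throw new IllegalArgumentException("replication factor exceeds broker count");
        }
        int[][] placement = new int[partitions][replicationFactor];
        for (int p = 0; p < partitions; p++) {
            for (int r = 0; r < replicationFactor; r++) {
                placement[p][r] = (p + r) % brokers;
            }
        }
        return placement;
    }
}
```

For 4 partitions, 3 replicas, and 4 brokers this produces 12 replicas in total, 3 per broker, so losing any single broker still leaves two copies of every partition.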

Slide 42

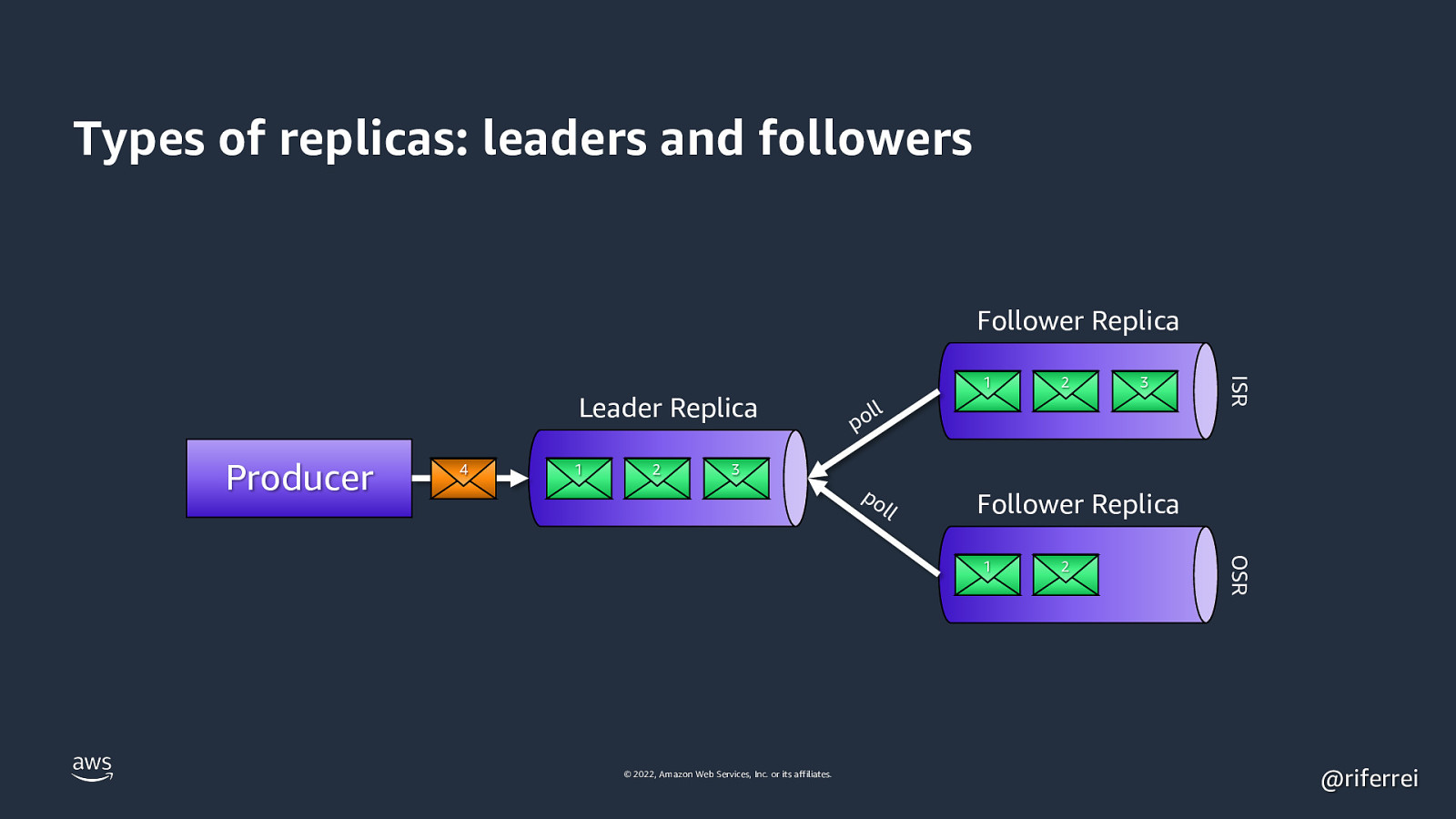

Types of replicas: leaders and followers

[Diagram: a Producer writes to the Leader Replica, and two Follower Replicas poll the leader. One follower is an ISR (in-sync replica) and the other is an OSR (out-of-sync replica).]

Slide 43

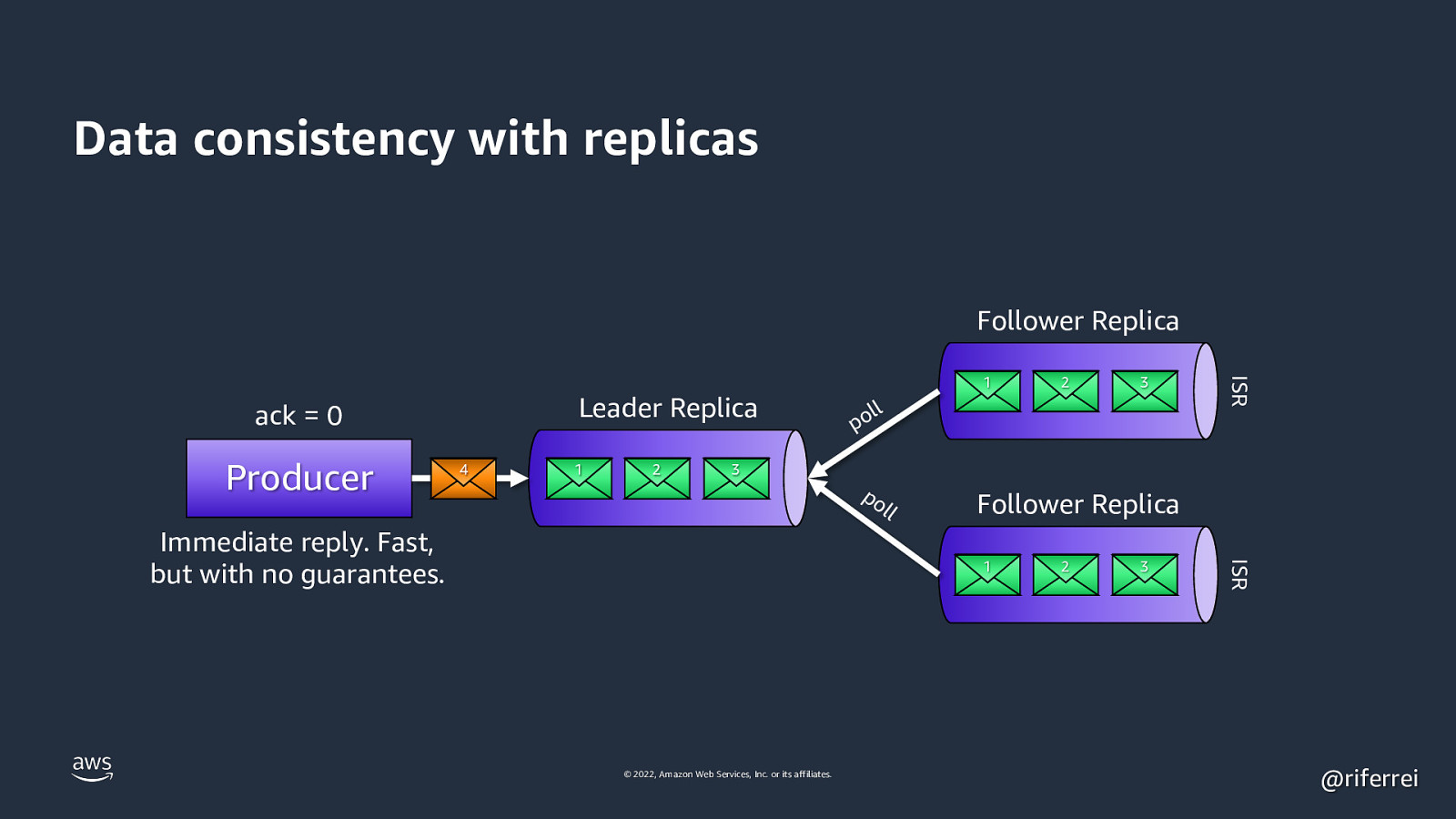

Data consistency with replicas

ack = 0: Immediate reply. Fast, but with no guarantees.

[Diagram: a Producer writes to the Leader Replica; both Follower Replicas are ISRs polling the leader.]

Slide 44

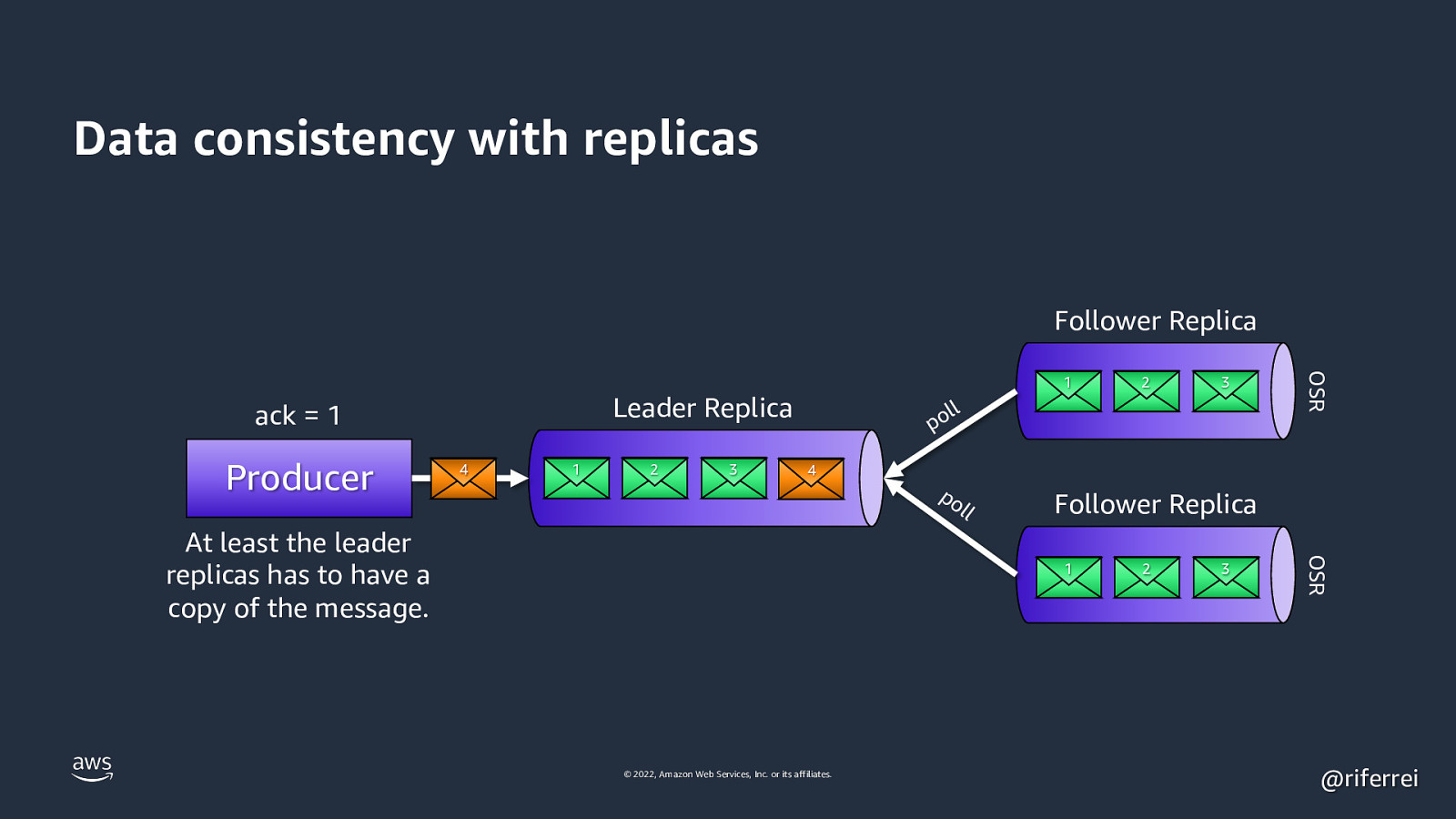

Data consistency with replicas

ack = 1: At least the leader replica has to have a copy of the message.

[Diagram: a Producer writes to the Leader Replica; both Follower Replicas are OSRs polling the leader.]

Slide 45

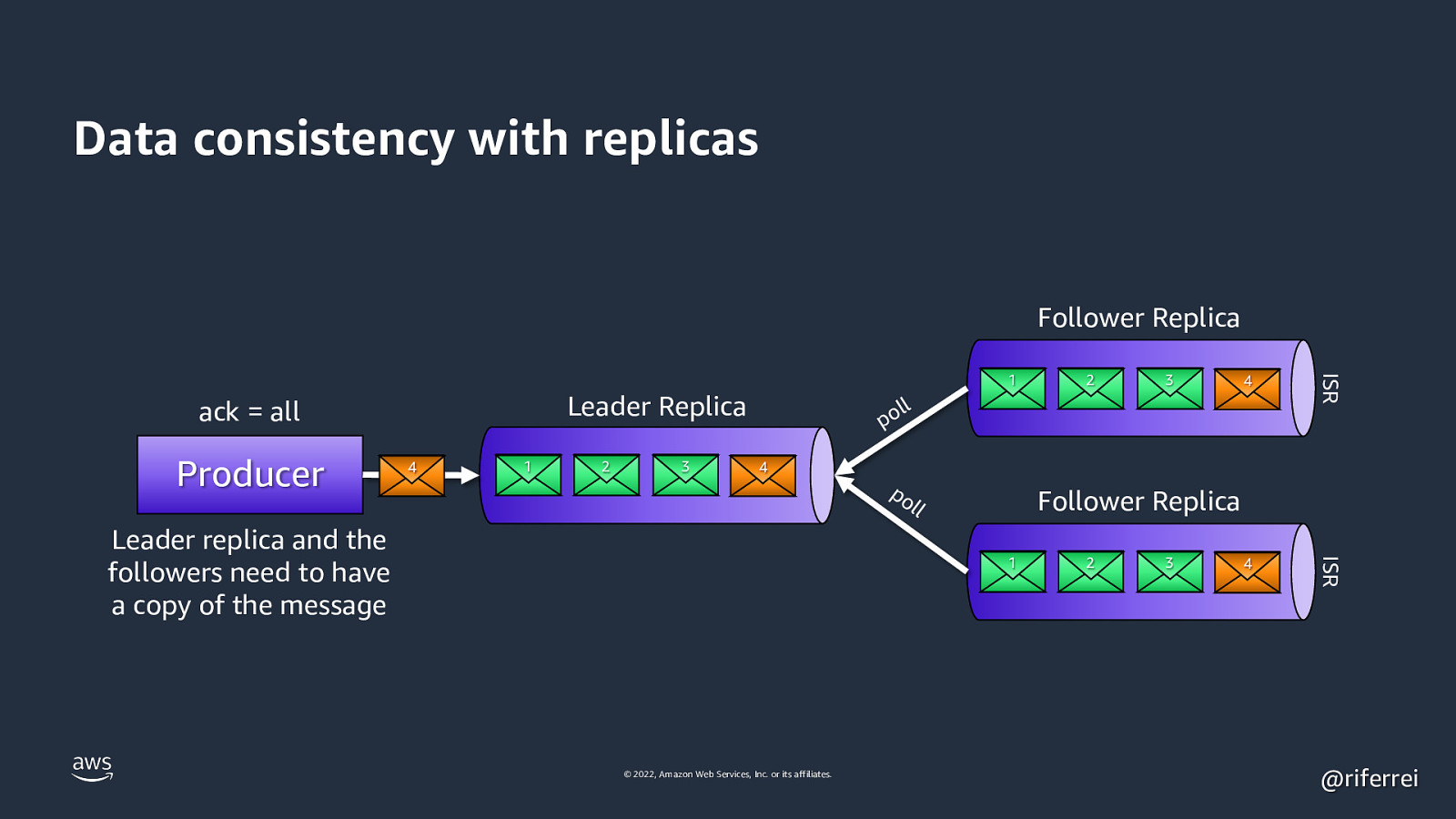

Data consistency with replicas

ack = all: The leader replica and the followers need to have a copy of the message.

[Diagram: a Producer writes to the Leader Replica; both Follower Replicas are ISRs polling the leader.]
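The three acknowledgment levels can be summarized as a small decision function. This is a model of the rules stated on the slides, not broker code: the class, method, and parameter names are hypothetical, and "followers" is read here as the in-sync followers. In the producer configuration the setting is named `acks`, and `-1` is an alias for `all`.

```java
/** Hypothetical model of when a produce request is acknowledged for each acks level. */
final class AckSketch {

    /**
     * @param acks                    "0", "1", or "all" ("-1" is an alias for "all")
     * @param leaderHasCopy           whether the leader replica has written the message
     * @param inSyncFollowers         number of followers currently in sync
     * @param inSyncFollowersWithCopy how many of those followers already hold the message
     */
    static boolean acknowledged(String acks, boolean leaderHasCopy,
                                int inSyncFollowers, int inSyncFollowersWithCopy) {
        switch (acks) {
            case "0":
                return true; // immediate reply, no guarantees
            case "1":
                return leaderHasCopy; // only the leader must have the message
            case "all":
            case "-1":
                // leader and every in-sync follower must have the message
                return leaderHasCopy && inSyncFollowersWithCopy >= inSyncFollowers;
            default:
                throw new IllegalArgumentException("unsupported acks value: " + acks);
        }
    }
}
```

The trade-off follows directly: the more replicas that must hold the message before the reply, the longer the producer waits and the less likely the message is lost if a broker fails.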

Slide 46

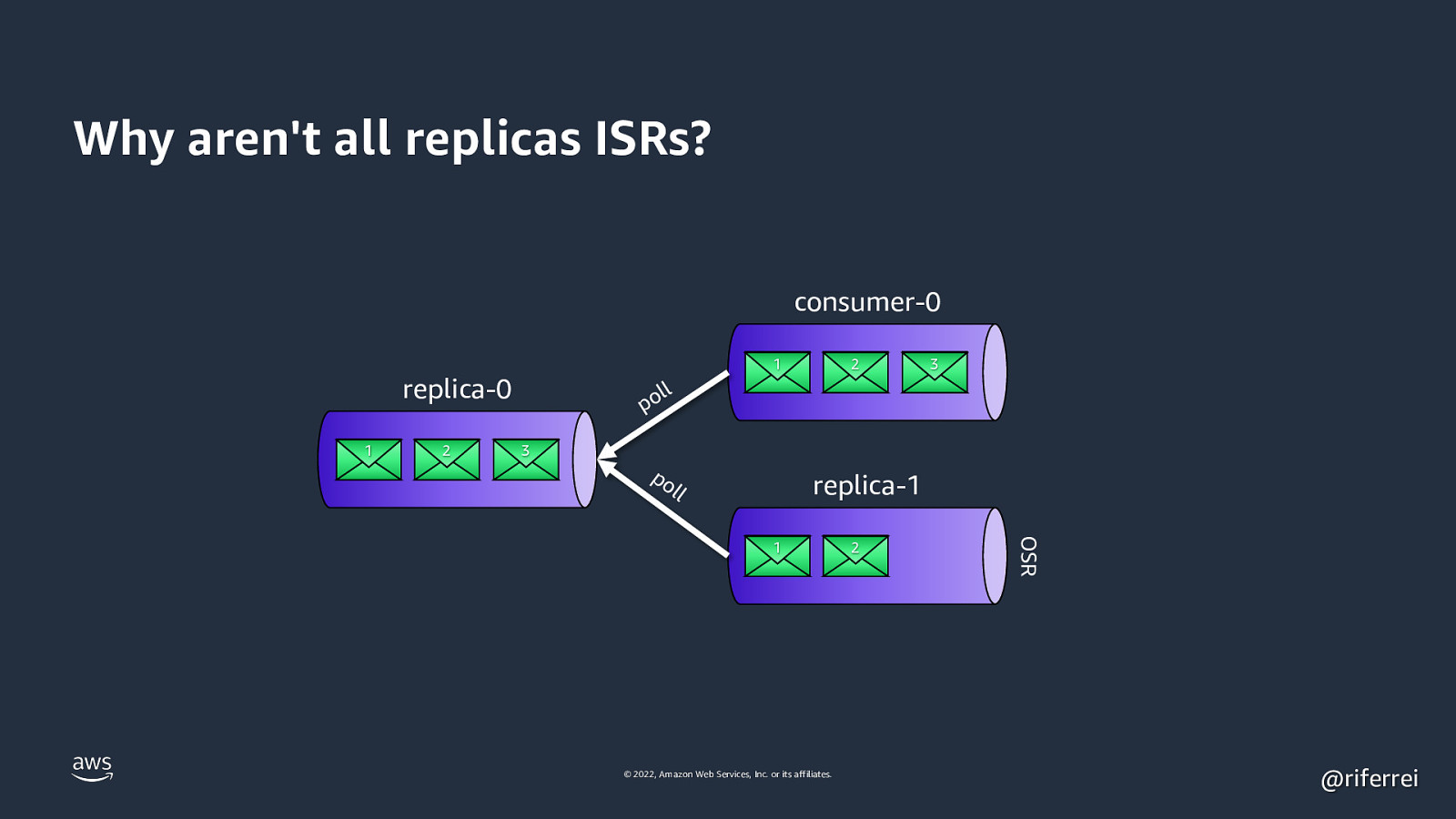

Why aren’t all replicas ISRs?

[Diagram: consumer-0, replica-0, and replica-1 polling events 1 to 3; one replica is marked OSR.]

Slide 47

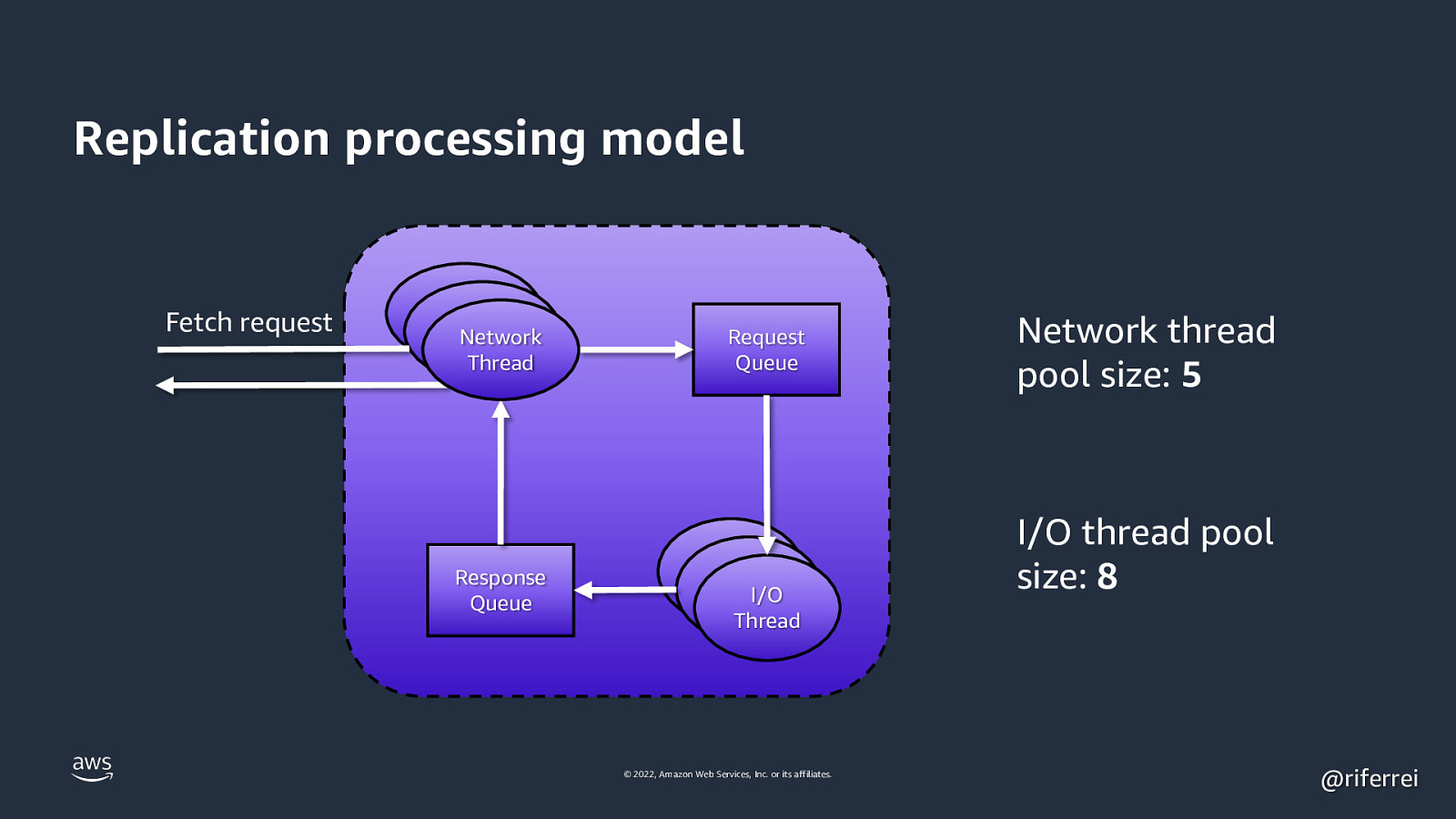

Replication processing model

[Diagram: a fetch request passing through the Acceptor Thread, Network Thread, Request Queue, I/O Thread, and Response Queue.]

Network thread pool size: 5
I/O thread pool size: 8

Slide 48

I wrote everything I showed here in this blog post: https://www.buildon.aws/posts/in-the-land-of-the-sizing-the-one-partition-kafka-topic-is-king/01-what-are-partitions/

Slide 49

Thank you!

Ricardo Ferreira
@riferrei